Sometimes we want pages that are already indexed removed from the index. We do this with the noindex directive, which is implemented through an on-page robots meta element or, more rarely, through the X-Robots-Tag HTTP header. The standard approach (not mine) for removing pages we don’t want crawled from the index is:

- Apply a noindex meta-tag to the offending pages.

- The noindex tag won’t be read unless Googlebot crawls the page. So leave the pages open to crawling and wait for Google to recrawl them, hopefully resulting in them dropping out of the index.

- Once the pages drop out of the index, implement robots.txt restrictions.
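The catch in this sequence is that a noindex only counts on a page Googlebot is allowed to fetch. As a minimal sketch (the function names are hypothetical), the Python below checks both conditions for a single page: whether robots.txt permits the fetch, using the standard library’s `urllib.robotparser`, and whether the page carries noindex in a robots meta tag or an `X-Robots-Tag` header. It is simplified: it ignores user-agent-prefixed header values such as `googlebot: noindex`, and `urllib.robotparser` applies rules in file order rather than Google’s longest-match precedence, so treat it as an approximation.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.extend(d.strip().lower() for d in content.split(","))


def has_noindex(html, headers):
    """True if the page has noindex in a robots meta tag or an X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # Header names are case-insensitive; prefixed values like "googlebot: noindex"
    # are not handled by this simplified check.
    values = [v for k, v in headers.items() if k.lower() == "x-robots-tag"]
    header_directives = [d.strip().lower() for v in values for d in v.split(",")]
    return "noindex" in parser.directives or "noindex" in header_directives


def noindex_is_visible(robots_txt, url, html, headers, agent="Googlebot"):
    """A noindex can only be seen if robots.txt lets the crawler fetch the page."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp.can_fetch(agent, url) and has_noindex(html, headers)
```

With `User-agent: *` and `Disallow: /` in robots.txt, `noindex_is_visible` returns `False` even for a page with a noindex meta tag, which is exactly why the standard approach leaves pages open to crawl first.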

All the while, the offending pages (pages we don’t want crawled) are being crawled. This may include previously uncrawled pages. So Googlebot is just consuming terrible pages we don’t want crawled. Great. But what’s the alternative?

I don’t care very much about getting pages I’ve blocked with robots.txt removed from the index (I am in a minority of SEOs here). I just don’t think there’s any search benefit to be had from removing something that’s no longer being crawled by Google. That said, others do, and I thought there might be a technique that makes everyone happy.

You can apparently use robots.txt as a way of implementing the noindex directive.

I was first exposed to the idea on the DeepCrawl blog, and again through Alec Bertram’s posts. The DeepCrawl post lacked a test, so I didn’t take it on board, but I have been mulling it over since.

If it works reliably on pages that are already blocked, it has a significant advantage over the existing methods, which all require the page to be crawled before the directive counts.

Testing Robots.txt Noindex

Update – a live example can be seen in the second half of my post See What Other Affiliates Are Promoting.

For this test we’re using an old domain that used to take any old nonsense from SEO agencies. This domain picked up the guest-blogging penalty and was blocked in robots.txt shortly afterwards. The following remain in the index:

At the start of this test there are 21 of these awful blog post URLs appearing in Google. They are indexed, yet blocked in robots.txt. The robots.txt sitewide block was put in place just over two years ago, so these have been in the index for a long time without falling out. I think the stubbornness of these URLs is a virtue here.

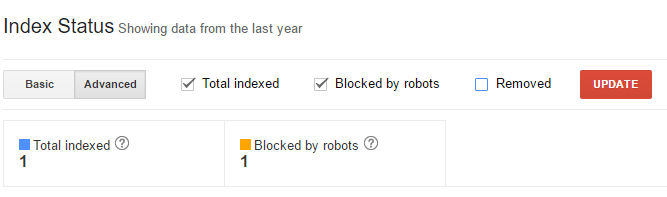

You can’t always trust Search Console.

Method

To test the method, I added a rule to robots.txt targeting the same URLs:

```
User-agent: *
Disallow: /
Noindex: /
```

I put the rule in place at 4.30pm on the 28th of April. I’m interested to see if this rule will result in the deindexation of pages which have been stubbornly in the index for many years.
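robots.txt is read line by line, one `field: value` record per line, with rules grouped under the User-agent lines that precede them. To illustrate how the three rules group together, here is a minimal Python parser; the function name is hypothetical, and it deliberately ignores every field other than Disallow and Noindex. Run on the same text squeezed onto a single line, a line-based parser like this one would see only one User-agent record with a strange value and no rules at all.

```python
def parse_robots_groups(robots_txt):
    """Group Disallow and Noindex values under the User-agent lines they follow.

    Simplified sketch: comments are stripped, consecutive User-agent lines
    share one group, and any other field is ignored.
    """
    groups = []  # list of (agents, rules) pairs
    current = None
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if not sep:
            continue  # blank or malformed line
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if not last_was_agent:  # a new group starts
                current = ([], {"disallow": [], "noindex": []})
                groups.append(current)
            current[0].append(value)
            last_was_agent = True
        else:
            if field in ("disallow", "noindex") and current is not None:
                current[1][field].append(value)
            last_was_agent = False
    return groups


rules = "User-agent: *\nDisallow: /\nNoindex: /\n"
for agents, fields in parse_robots_groups(rules):
    print(agents, fields)
# ['*'] {'disallow': ['/'], 'noindex': ['/']}
```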

Another subdomain was set up and left open to indexing. If all goes well, a site: search should reveal only the homepage of this subdomain. I’m a big fan of encouraging things to get crawled, but this site is clearly likely to be very low in Google’s crawl scheduling. And Google shouldn’t be crawling it at all, given the robots.txt restriction.

Once suitably encouraged, Googlebot starts requesting robots.txt, as the server logs show. So we wait, safe in the knowledge that Googlebot is not crawling the URLs we don’t want crawled.
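Checking the logs for this can be scripted. The sketch below assumes the server writes the common Combined Log Format and simply trusts the user-agent string; since that string can be spoofed, a reverse DNS lookup would be needed to confirm genuine Googlebot traffic. The function names are hypothetical.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_requests(log_lines):
    """Count the paths requested by clients whose user-agent mentions Googlebot."""
    counts = Counter()
    for line in log_lines:
        m = LOG_LINE.match(line)
        if m and "googlebot" in m.group("agent").lower():
            counts[m.group("path")] += 1
    return counts


def only_robots_txt(counts):
    """True if Googlebot fetched robots.txt and nothing else."""
    return set(counts) == {"/robots.txt"}
```

If `only_robots_txt` stays true while the test runs, Googlebot is reading the rules without touching the blocked URLs.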

Findings

Indexation was steady in the week before the test. When I checked on the morning of May 1st, five had been removed from the index. On the morning of May 3rd, a further URL dropped out, leaving us with 15.

Two weeks later, nothing further has changed: six URLs dropped out of the index, and then nothing.

Result…?

I’m an impatient sort, so I’ll be leaving it there and checking again periodically. The results are not fast enough or thorough enough for me to rely on (it works, just not well enough for my taste), but unlike other methods, this one doesn’t risk Googlebot crawling things we don’t want crawled.

Updates:

Update May 23rd: Google has graciously removed another.

Update May 30th: And another.

Update June 1st: And another.

Update September: I stopped monitoring this, but we’re down to 5 remaining.
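The counts reported in the findings and updates can be tallied to show the pace of removal. This small Python tally uses only the figures given in this post; the September update reports a remaining count rather than individual removals, so it is handled separately.

```python
START = 21               # blocked URLs indexed at the start of the test
SEPTEMBER_REMAINING = 5  # count reported in the September update

# (date, URLs that dropped out), as reported in the findings and updates
removals = [("May 1", 5), ("May 3", 1), ("May 23", 1), ("May 30", 1), ("June 1", 1)]


def remaining_after(start, drops):
    """Yield (date, URLs still indexed) after each recorded removal."""
    left = start
    for date, dropped in drops:
        left -= dropped
        yield date, left


for date, left in remaining_after(START, removals):
    print(f"{date}: {left} still indexed")

removed = START - SEPTEMBER_REMAINING
print(f"By September: {removed} of {START} removed ({removed / START:.0%})")
# May 1: 16 still indexed
# May 3: 15 still indexed
# May 23: 14 still indexed
# May 30: 13 still indexed
# June 1: 12 still indexed
# By September: 16 of 21 removed (76%)
```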

Conclusion

The site in this test hasn’t been accessible to crawlers for over two years. I think this makes for a good test: we are removing a particularly stubborn stain here. But for the same reason, I also believe it’s a particularly slow test. The only exposure Google has to those URLs is its ancient memory of them (it can’t access the sitemap or internal links) and the submission to an indexing service. There’s also the possibility that Google is electing to keep these URLs in the index because of third-party signals, like the links agency types were hitting them with from 2011 to 2013.

On an actual live site with fewer restrictions, I believe deindexing would be much quicker, as there are more signals, such as internal links, to trigger this pseudo-crawl behaviour. Besides, do you know how long it takes Google to remove a page from the index using a standard noindex tag?

To Use This

- Take your robots.txt file and copy and paste all of the “Disallow:” lines below the current rules.

- In the copy, find and replace “Disallow:” with “Noindex:”.

- Each disallow rule should now be paired with a noindex rule.

- See what happens.
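The steps above can be scripted. This Python sketch (the function name is hypothetical) is a slight variation on them: rather than pasting every copied line below the existing rules, which in a file with several User-agent groups would attach all the Noindex lines to the last group, it inserts each Noindex line directly after the Disallow line it mirrors, so each pair stays in the same group. Empty `Disallow:` lines, which allow everything, are left unpaired. Bear in mind that Google retired support for Noindex in robots.txt on September 1, 2019.

```python
def pair_noindex_with_disallow(robots_txt):
    """Return robots.txt text with a Noindex line added after every Disallow line.

    Each Noindex copy stays in the same User-agent group as its Disallow rule.
    Empty "Disallow:" lines (which allow everything) are left unpaired.
    """
    out = []
    for line in robots_txt.splitlines():
        out.append(line)
        rule = line.split("#", 1)[0].strip()  # ignore trailing comments
        field, sep, value = rule.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            out.append(f"Noindex: {value.strip()}")
    return "\n".join(out) + "\n"


before = "User-agent: *\nDisallow: /private/\n"
print(pair_noindex_with_disallow(before), end="")
# User-agent: *
# Disallow: /private/
# Noindex: /private/
```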

Upside: You won’t make things worse.

Downside: It might not do anything.

Simply put:

In robots.txt, pair your disallow rules with noindex rules.

I’ll update the post should anything change (update above: they’re still being removed from the index).
