If you’re familiar with the blog or have encountered my work before, you’ll know I find the definition of cloaking (serving different content to search engines and users) a bit inflexible.

In practice, search engines seem to differentiate Good Cloaking and Bad Cloaking the way Justice Potter Stewart defined obscenity (“I know it when I see it”).

Today I’ll be sharing the results of indulging in the “everyone-agrees-this-is-bad-why-would-you-do-this” flavour of cloaking.

While I’m not going to be stuffing my website with dick pill links (unfortunately a common occurrence, as this site is frequently hacked), I am going to be showing search engines something vastly different in content and intent from what human users see.

Let’s Do Some Cloaking.

On 26.06.2023 I started cloaking the entire website from human visitors. If you visited any of the pages controlled by WordPress, you would get the following experience:

A hostile approach to potential customers finding out about me is why I’m so good at business.

But Isn’t That Cloaking?

Yes.

(you can skim read this post if you want, but please try to keep up)

“Cloaking refers to the practice of presenting different content to users and search engines with the intent to manipulate search rankings and mislead users. Examples of cloaking include:

- Showing a page about travel destinations to search engines while showing a page about discount drugs to users
- Inserting text or keywords into a page only when the user agent that is requesting the page is a search engine, not a human visitor”

Source: https://developers.google.com/search/docs/essentials/spam-policies#cloaking

Assume I held both intents as I put the cloak in place. Let’s test if it’s working.

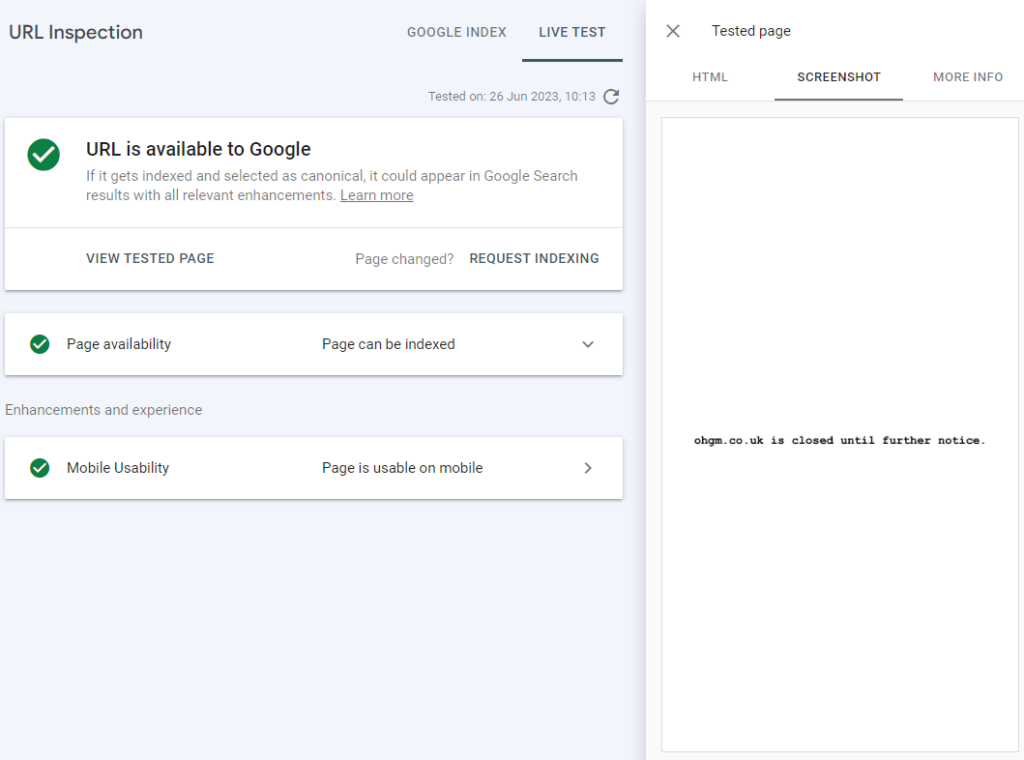

The tooling available to us no longer presents as Googlebot proper, so if I use the URL Inspection tool (or any of the other public-facing tooling), I get the same experience as humans:

However, if we wait for things to get reindexed we can see that the cloak is working as desired:

Great. Clearly the bot experience is pretty good, and the human experience is absolutely terrible.

What Do You Predict Will Happen?

Making a prediction before reading the results is a great chance to test your instincts / ability to anticipate search engine behaviour. Considering rare scenarios can be a useful way to improve this semi-tangible skill. The test will run for one month.

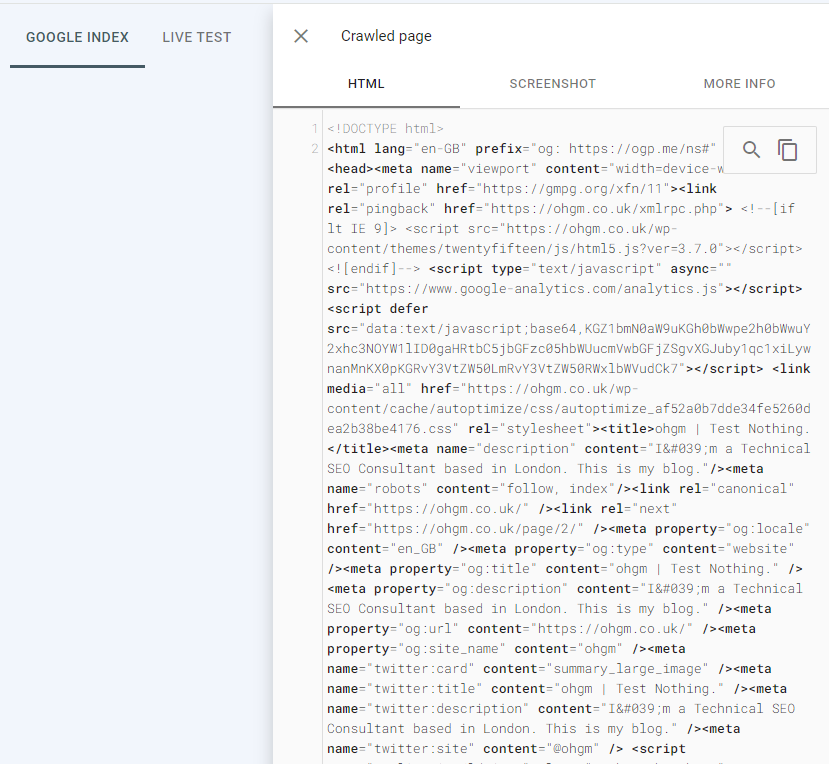

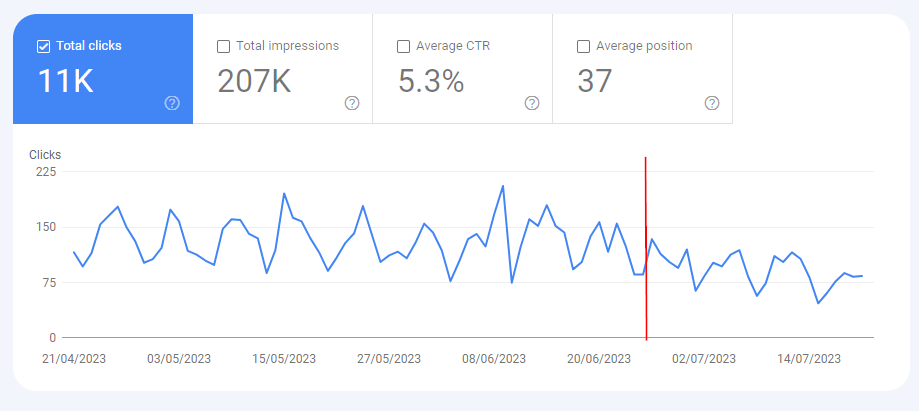

Here’s how things look before the test:

You cannot expect Google to rank a website which presents a duplicate “No Admittance Except on Party Business” sign on every page.

However, I’ve demonstrated that, at least at the start of this test, Googlebot is receiving substantially different content than users.

One of the things you should consider is whether this will continue. Is there an established best practice answer, or is that concept useless here? Here are some reasonable guesses:

- Google will, within one month, identify the very obvious cloaking. Once this happens, because the guidelines are obviously being broken, organic clicks / visibility will drop sharply to near-zero.

- Google doesn’t actually have any automated checks in place, this is just something people assume. The cloak works, so traffic will remain normal, with normal fluctuations.

- There are automated checks, but the experiment will end before the site gets caught. Nothing will happen, but it would if you left it running for 6 months.

- The cloak will hold, but traffic is going to drop. It will do so gradually rather than rapidly.

The fact that I’m writing this post probably tells you something interesting happened.

And What Did Happen?

Before starting the test I was thinking – if my traffic survives for the period, then it’s not been caught in any automatic cloaking detection.

~95% of my clicks from Google organic are going to a defunct post about viewing LinkedIn profiles without logging in. As you can guess, the site’s traffic did not go to zero.

Here’s what it’s done instead:

How are we to understand this?

The decline is gradual and aligns neatly with the cloak being in place. There isn’t the sharp drop we might imagine from being caught falling foul of the guidelines.

If cloaking detection does exist, then either it needs more “suspect” content (e.g. porn, pills, or poker link farms) to trigger than I’ve given it, or it runs very infrequently. Or it may be limited to websites above certain traffic/popularity thresholds.

;_;

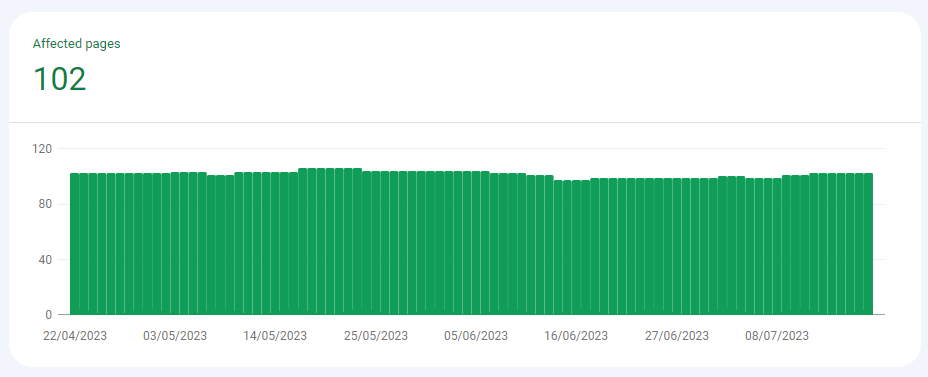

Anyway, indexing has remained steady.

So here’s my thinking. Firstly – I’m willing to accept that the timing of the decline could be coincidence. However, this makes for a dull post and I don’t really believe it.

Google employees have said several times that, one way or another, pogo-sticking (dwell time, bounce back to SERPs) isn’t a signal, or that it would be a very poor/unreliable/irresponsible signal for them to use. This has been the case for the 47 years I have been working as an SEO professional.

I’d be astonished if a trained algorithm didn’t make use of these signals (even “unintentionally”) if they’re being recorded. This has always been my discomfort with harder types of paywalls. I don’t think Google will have succeeded in keeping these signals out.

The percentage of users immediately refining their query or clicking on another result will have increased dramatically over the month. I can imagine a pseudo-metric, such as the share of users doing this over a given period, downweighting the URL, which would explain the gradual decline.
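To make that speculation a bit more concrete, here’s a toy model in Python. Everything in it is made up for illustration: the `SerpVisit` record, the 28-day trailing window, the 30% “normal” bounce-back rate and the linear penalty are my assumptions, not anything Google has described. The point is only the shape: a metric averaged over a trailing window fills up with bad visits one day at a time, so the penalty ramps in gradually rather than falling off a cliff.

```python
from dataclasses import dataclass


@dataclass
class SerpVisit:
    day: int                # day index of the search visit (hypothetical record)
    returned_to_serp: bool  # user came straight back to refine or pick another result


def pogo_rate(visits: list[SerpVisit], day: int, window: int = 28) -> float:
    """Share of visits in the trailing `window` days that bounced back to the SERP."""
    recent = [v for v in visits if day - window < v.day <= day]
    if not recent:
        return 0.0
    return sum(v.returned_to_serp for v in recent) / len(recent)


def ranking_multiplier(rate: float, baseline: float = 0.3) -> float:
    """Hypothetical downweight: 1.0 at or below `baseline`, falling linearly to 0.0 at rate 1.0."""
    excess = max(0.0, rate - baseline)
    return 1.0 - excess / (1.0 - baseline)


def simulate(cloak_start: int = 30, days: int = 60, visits_per_day: int = 10) -> list[float]:
    """Made-up traffic: 3 in 10 visitors pogo-stick before the cloak, all of them after."""
    visits = []
    for day in range(days):
        bounced = visits_per_day if day >= cloak_start else 3
        visits += [SerpVisit(day, i < bounced) for i in range(visits_per_day)]
    return [ranking_multiplier(pogo_rate(visits, d)) for d in range(days)]


if __name__ == "__main__":
    for day, m in enumerate(simulate()):
        print(f"day {day:2d}  multiplier {m:.2f}")
```

Running it prints a multiplier that sits at 1.00 until the cloak goes up on day 30, then slides down over roughly four weeks, reaching 0.00 on day 57 once the whole 28-day window is cloaked.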

Broadly, the industry seems to agree that “satisfying user intent” is good for organic performance. This test attempts to tease out the how and why by doing the exact opposite.

My willingness to invite manual penalties on this domain at the slightest provocation has at least unearthed a replicable method. If you have the traffic and the will, you too can present a terrible user experience to humans and test this for yourself.

So what’s next? I’m removing the ( ͡° ͜ʖ ͡°)╭∩╮ just before publishing so that you can read this.

And if it does recover, what could it be other than some pogo-sticking type of user-dissatisfaction metric playing into the rankings?

Thanks for reading!

Oh…

But they were, all of them, deceived, for there was another graph.

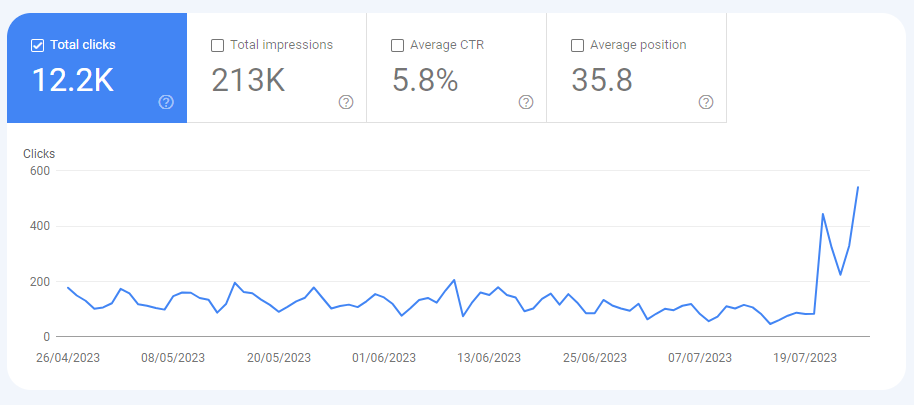

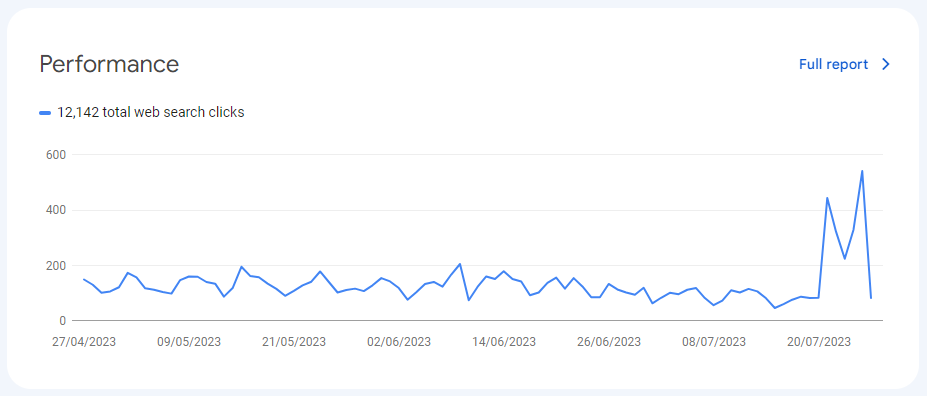

Unfortunately I don’t think it’s reasonable to describe this as a recovery, because it’s ascending to unreasonable levels just as I finish writing the post (such is life).

This is emphatically not the result of removing the cloak – it’s still running as I write this (but not as you’re reading it). And I think it’s unlikely the site’s been caught and rewarded. Given the timing, there are very good odds this was a Core Update.

My theory for why so many ranking shifts are dramatic around Core Updates is that user interaction signals are “emptied”, leaving mainly “traditional” signals (content and PageRank) for ranking to work with. I think there’s also a case that E-E-A-T signals get accounted for all at once as part of these updates.

Because my newsletter signup says “you can trust me i swear”, I score 10/10 on each E-E-A-T signal.

Part of the gradual shuffling in the SERPs following an update would then be the result of these signals being gathered (and “counting” once they come in). Something like this:
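As a similarly hypothetical sketch of that idea: a URL’s score is a “traditional” part plus an interaction part that only counts in proportion to how many interactions have been gathered since the last reset. The `UrlSignals` class, the `prior` of 50 visits and the -1 satisfaction per cloaked visit are all invented; this is my conjecture expressed as code, not a description of Google’s systems.

```python
from dataclasses import dataclass


@dataclass
class UrlSignals:
    traditional: float            # hypothetical content + PageRank score
    interaction_sum: float = 0.0  # running total of per-visit satisfaction (-1 bad .. +1 good)
    interactions: int = 0         # visits observed since the last reset

    def observe(self, satisfaction: float) -> None:
        self.interaction_sum += satisfaction
        self.interactions += 1

    def core_update_reset(self) -> None:
        # The conjecture: interaction signals are "emptied" at a Core Update.
        self.interaction_sum = 0.0
        self.interactions = 0

    def score(self, weight: float = 1.0, prior: int = 50) -> float:
        if self.interactions == 0:
            return self.traditional  # only "traditional" signals left to rank on
        mean = self.interaction_sum / self.interactions
        # Confidence grows with the number of interactions gathered, so the
        # interaction signal "counts" more and more as the data comes in.
        confidence = self.interactions / (self.interactions + prior)
        return self.traditional + weight * confidence * mean


def simulate(days: int = 30, update_day: int = 15, visits_per_day: int = 20) -> list[float]:
    """Daily scores for a cloaked URL where every human visit is a bad one."""
    url = UrlSignals(traditional=1.0)
    scores = []
    for day in range(days):
        if day == update_day:
            url.core_update_reset()
        for _ in range(visits_per_day):
            url.observe(-1.0)
        scores.append(url.score())
    return scores


if __name__ == "__main__":
    for day, s in enumerate(simulate()):
        print(f"day {day:2d}  score {s:.3f}")
```

For a cloaked site every visit drags the score down, so a reset at a Core Update produces the jump-then-slide pattern: the score on the update day is back where it was on day 0, then declines again as the bad interactions re-accumulate.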

It’s possible some Core Updates might work the other way and “respect” gathered interaction signals all at once, or set some level for a “user interaction” score, but that’s unlikely to be the case here, as I have obliterated all human goodwill.

Despite this, the initial performance improvement was far beyond a return to “normal”, which would suggest a significant change in weighting elsewhere. I think trying to work out what this was is folly. What I think is nice is that the subsequent rapid plummet to Earth we’ve seen probably indicates, again, that human signals are counting for something. Except I’m going to remove the cloak now.

This is conjecture-heavy, and I would appreciate alternative views on what else could explain this.

The end.

I’d like to thank Tom Capper for sense checking a couple of points, and I’d also like to thank the people in the SEO industry who asked if I was ok. Thanks.

While leaving this running for longer would be interesting, I’m someone who exchanges services for currency, and having an inaccessible website in quite a literal sense is something of an impediment.

I will probably update this post with another graph in the near future. I appreciate that this is a period of high volatility in the SERPs but would like to stress that, in this, the best of all possible worlds, traffic should not have gone up.

I didn’t read all of that?

Ok, sure:

“Build for users, not for bots”

You’ve probably seen variants of this phrase, and of course it’s correct, and of course I dislike it. If you want to build for users and not for bots, you can copy this experiment, swap the audiences, and see how it goes.

I’m being petulant – I know most people uttering these phrases don’t actually believe them, but like the saccharine shorthand. My discomfort is that some people may take them to mean “you don’t need to think about the bot experience because search engines are smart enough”, which is not an uncommon attitude.

Alternatively:

You can 5x your clicks from Google by preventing your users from accessing your website (source: dream)

Gnash & Wail

With the recent change to the user-agents used by Google’s testing tools, customising for them might be the first thing we’d think to recommend, but you don’t have to if you don’t want to.

If someone wants to hide their cloaking behaviour, they will serve the bot version to Googlebot’s user-agent and IPs (alongside an internal IP + UA combo for their own checks), while studiously ignoring the testing tools’ IPs and user-agents.
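For anyone checking their own logs rather than hiding anything, the IP half of that is the reverse-then-forward DNS check Google documents for verifying Googlebot: the IP’s PTR record should point at a googlebot.com or google.com host, and that host should resolve back to the same IP. Below is a minimal Python sketch. The `Google-InspectionTool` token is the user-agent Google introduced for its testing tools in 2023, but treat both it and the domain list as assumptions to check against Google’s current crawler documentation; the function names are mine, and the lookups are injectable so the logic can be tried without network access.

```python
import socket

# Domains accepted for Googlebot in Google's documented verification
# (assumption: check the current "Verifying Googlebot" page for the full list).
GOOGLE_HOST_SUFFIXES = (".googlebot.com", ".google.com")


def _forward_addresses(host):
    """All IPv4/IPv6 addresses the hostname resolves to."""
    return {info[4][0] for info in socket.getaddrinfo(host, None)}


def verify_google_ip(ip, reverse_lookup=socket.gethostbyaddr,
                     forward_lookup=_forward_addresses):
    """Reverse DNS, check the domain, then forward DNS back to the same IP."""
    try:
        host = reverse_lookup(ip)[0]       # gethostbyaddr -> (hostname, aliases, addresses)
    except OSError:                        # socket.herror subclasses OSError
        return False
    if not host.rstrip(".").endswith(GOOGLE_HOST_SUFFIXES):
        return False
    try:
        addresses = forward_lookup(host)
    except OSError:                        # socket.gaierror subclasses OSError
        return False
    return ip in addresses


def classify_request(user_agent, ip, reverse_lookup=socket.gethostbyaddr,
                     forward_lookup=_forward_addresses):
    """Label a log line as Google testing tool, Googlebot, a spoof of either, or other."""
    if "Google-InspectionTool" in user_agent:
        label = "google-testing-tool"
    elif "Googlebot" in user_agent:
        label = "googlebot"
    else:
        return "other"
    if verify_google_ip(ip, reverse_lookup, forward_lookup):
        return label
    return "spoofed-" + label
```

With the default arguments each call does live DNS lookups, so this suits batch log analysis better than the request path.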

Something I’ve not seen the industry mourning is the loss of a reliable way to see whether anyone else is cloaking. Previously, using the URL Inspection Tool via a 301 was the best way to do this, but now? Please let me know if you’ve solved this.
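The naive outside-in check is still easy to sketch, with a large caveat: it only catches cloaks keyed on the user-agent string. Anything keyed on Google’s IPs, as described above, will serve both requests the human version and sail straight through. This Python sketch fetches a URL twice, once claiming to be Googlebot and once as a browser, and compares the visible words; the 0.5 threshold and both user-agent strings are arbitrary assumptions.

```python
import difflib
import urllib.request
from html.parser import HTMLParser

# Assumed user-agent strings; any plausible browser UA would do.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/115.0 Safari/537.36")


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.chunks.append(data.strip())


def visible_words(html):
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks).split()


def content_similarity(html_a, html_b):
    """0.0 (nothing shared) to 1.0 (identical visible text), by word sequence."""
    return difflib.SequenceMatcher(None, visible_words(html_a), visible_words(html_b)).ratio()


def fetch(url, user_agent, timeout=10.0):
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def looks_cloaked(url, threshold=0.5):
    """True if the Googlebot-UA and browser-UA versions differ a lot (UA cloaks only)."""
    return content_similarity(fetch(url, GOOGLEBOT_UA), fetch(url, BROWSER_UA)) < threshold
```

`looks_cloaked("https://example.com/")` makes two live requests. A low similarity is a prompt to look closer rather than proof, since personalisation or A/B tests can also make two fetches differ.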

You are my favorite because you consistently melt my SEO mind.

I’m interested in finding out more about why you feel the spike was from a Core Update. [Also, you may want to not time things to coincide with the end of the month, which is when Google runs updates consistently every month]

Also, why not test this out with just one post rather than the whole site to see how that one page reacts?

Very interesting article!

Not so long ago, we used to stuff our gambling pages with mini games to increase time spent on the page, and the results were terrific in the SERPs.

About your last question on checking whether a page is cloaked, it seems to be impossible now. The only thing I can think of is using Google Cloud as a proxy to get a real Google IP and then modifying the UA.

It’s hard to say without referral data on the spike, or IPs from log files to determine whether this is Googlebot testing the site or related to the July 18 update. Typically, fluctuations like these are normal in the first 48 hours of an update, as the rollout is tested in a live environment and propagates across systems, and the SERPs are in flux.

That’s brilliant! Hahaha