SEOs moaning about site speed when they are supposed to be doing work is a staple of SEO Twitter. I’m not going to talk about how to make sites faster, but about how to make them faster (apologies for this pun).

Fear, Greed, Envy, Shame

This post is a reworking of one of the parts of my presentation at ohgmcon4 on how to misuse the Chrome User Experience Report (CrUX) as plausible leverage to improve websites for users, and yourself:

The main purpose of an SEO Consultant is to drag graphs upwards in exchange for currency.

https://twitter.com/ohgm/status/1049307834347737088

I’ve seen Tom Capper speak very eloquently about this in the past, and I believe a hearty proportion of effective Agencies and Consultants have an amoral lean to getting things implemented.

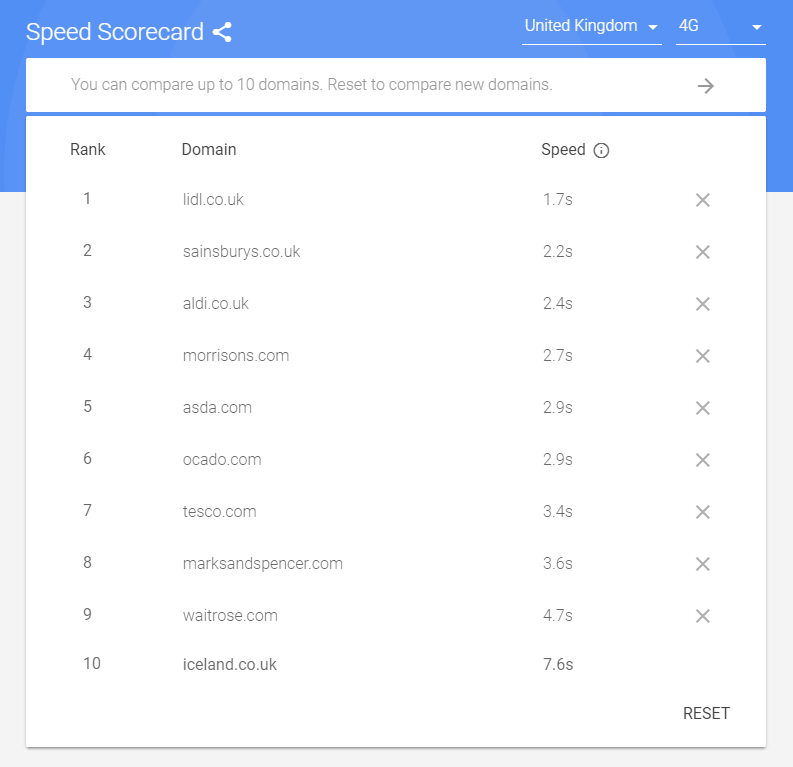

For site speed changes, the CrUX family makes this a joy to achieve. Let’s say you’re looking to explore this – we’re going to start gathering some data. The easiest first step is Google’s mobile site speed scorecard:

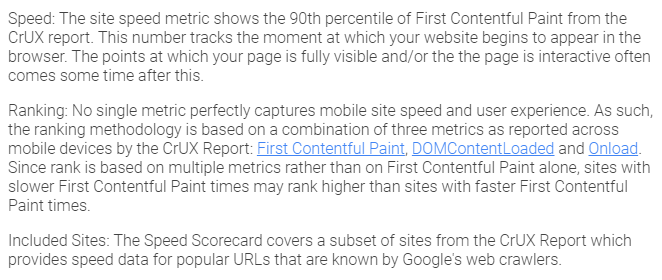

It’s free to use and shows up to 10 domains at once, ranked. Country and connection speed can be selected. Here is some explanation of the numbers you’re seeing:

Speed for this report is based on the 90th percentile of First Contentful Paint, while the Ranking is based on FCP, DCL and Onload. This report is fed by Popular URLs that are known by Google’s web crawlers.
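To make "90th percentile of First Contentful Paint" concrete, here is a minimal sketch of estimating a percentile from a histogram of load times. It assumes the data arrives as (start, end, density) bins with densities summing to roughly 1, and it interpolates linearly inside a bin. The function name and the example bins are made up for illustration; this is not how the scorecard itself is computed.

```python
def percentile_from_histogram(bins, pct):
    """Estimate the pct-th percentile (0-100) from (start_ms, end_ms, density) bins.

    Assumption: densities sum to roughly 1 and load times are spread
    evenly inside each bin, so we interpolate linearly within the bin
    where the cumulative density crosses the target.
    """
    target = pct / 100.0
    cumulative = 0.0
    for start, end, density in sorted(bins):
        if density > 0 and cumulative + density >= target:
            fraction = (target - cumulative) / density
            return start + fraction * (end - start)
        cumulative += density
    # Densities summed to less than the target: fall back to the slowest bin edge.
    return max(end for _, end, _ in bins)


# Hypothetical FCP distribution: half of loads under 1s, a fifth slower than 2s.
example_bins = [(0, 1000, 0.5), (1000, 2000, 0.3), (2000, 4000, 0.2)]
print(round(percentile_from_histogram(example_bins, 90)))
```

With these made-up bins the 90th percentile lands halfway through the slowest bin, at about 3000 ms, which is why a long tail of slow loads hurts this number even when the median looks fine.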

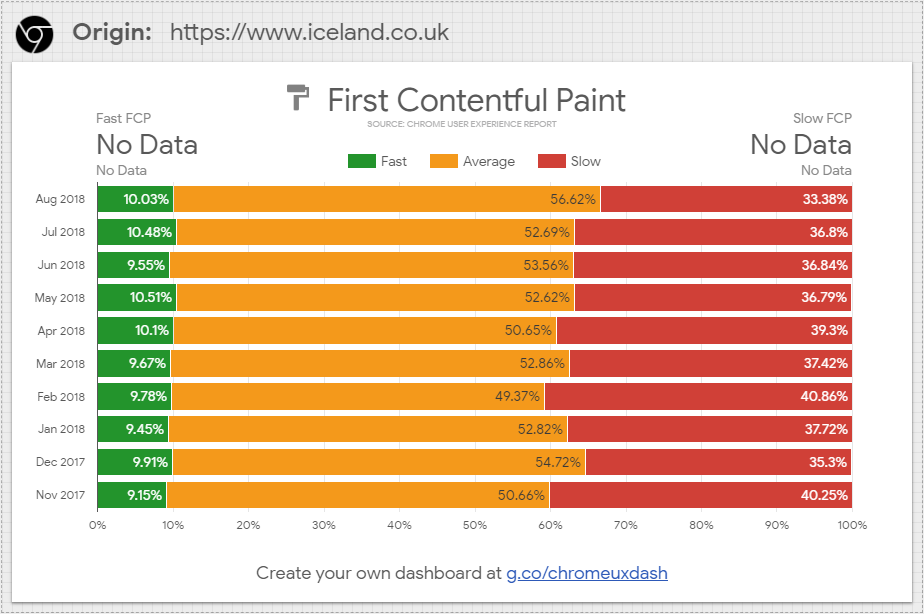

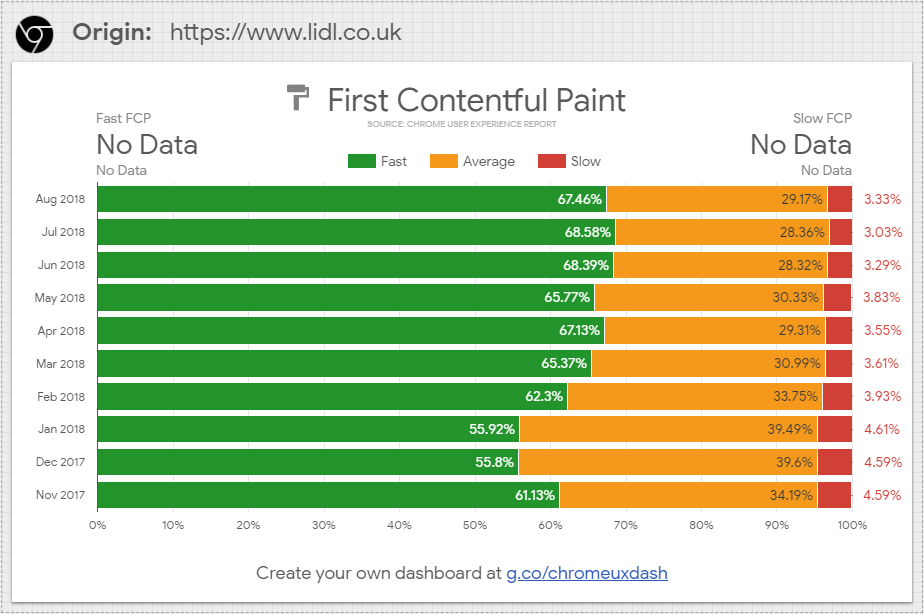

Next, this Data Studio report is useful because it doesn’t cost any BigQuery credits, so it can be used for quick competitor comparisons and dashboards:

Competitor comparisons and comparisons over time are very easy to get and useful to flick between quickly to enrage:

First input delay is being experimented with in the CrUX report. This is the direction things are headed in (see update below).

These two cheap reports are great for getting buy-in to investigate further.

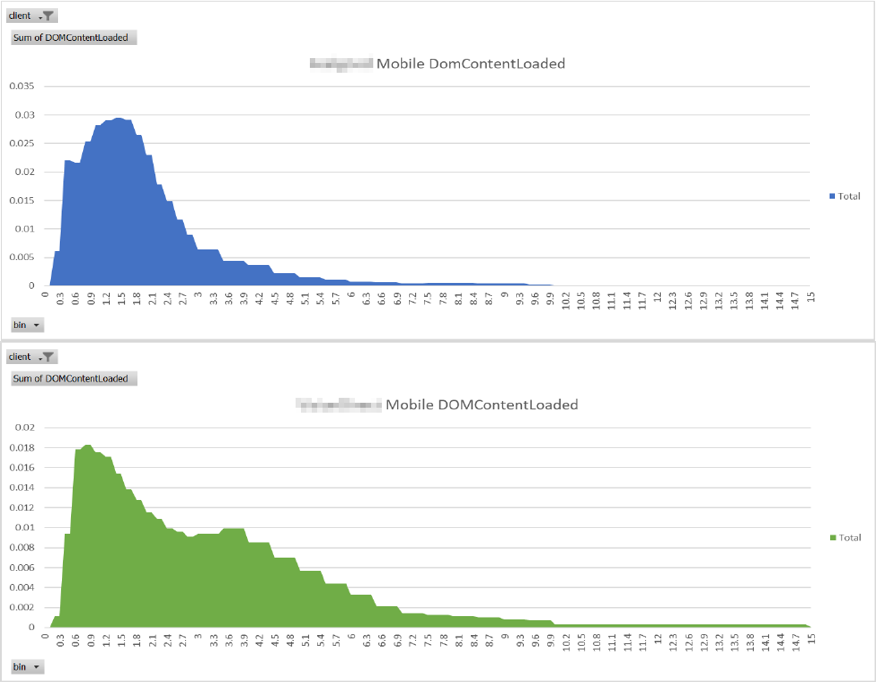

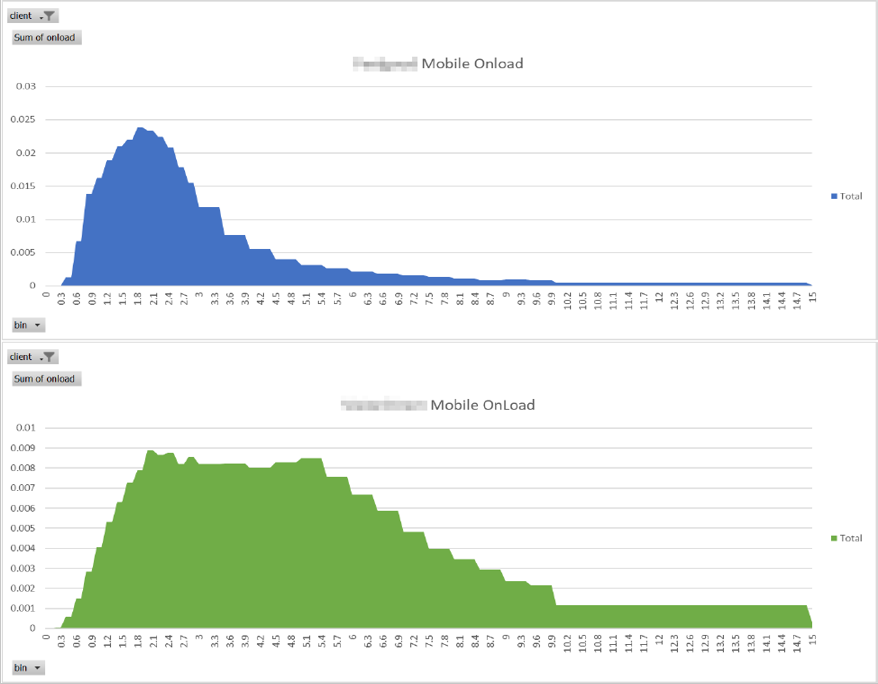

If this looks like a worthwhile use of your time, you can directly query the BigQuery CrUX report. The CrUX cookbook on GitHub is great for this, as is Paul Calvano’s post. If I were to write about how to do this I would just be stealing their work. Go and read them and modify the example queries. Generate some comparative charts with a better (but realistic) competitor:

Presenting nicer versions (do as I say not as I do) of the above charts for each metric available achieves several useful things:

- Enrages your client and provides direction.

- Tells you where in the process your client’s site is slower, and for which users.

- Avoids the “it’s fast on my machine” problem by giving developers solid metrics to work with and a way of measuring month-to-month improvements.
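For the BigQuery comparison mentioned earlier, the cookbook and Paul Calvano’s post remain the proper references. As a rough sketch of the shape such a query takes, the helper below builds SQL that ranks a handful of origins by the share of page loads with a First Contentful Paint under a threshold. The function name, the example origins, and the `201809` table month are assumptions for illustration; the table and field names follow the public `chrome-ux-report` dataset layout, so check them against the cookbook before spending credits.

```python
import re

# Only plain scheme://host[:port] origins are allowed, so nothing odd ends up in the SQL.
ORIGIN_PATTERN = re.compile(r"^https?://[A-Za-z0-9.-]+(:\d+)?$")


def build_fast_fcp_query(origins, yyyymm, threshold_ms=1000):
    """Return SQL comparing origins by the share of loads with FCP under threshold_ms.

    Assumption: monthly tables are named `chrome-ux-report.all.YYYYMM` and
    expose first_contentful_paint.histogram.bin with start and density fields.
    """
    if not re.fullmatch(r"\d{6}", yyyymm):
        raise ValueError(f"expected a YYYYMM table suffix, got {yyyymm!r}")
    if not origins:
        raise ValueError("at least one origin is required")
    for origin in origins:
        if not ORIGIN_PATTERN.match(origin):
            raise ValueError(f"not a plain origin: {origin!r}")

    origin_list = ", ".join(f"'{origin}'" for origin in origins)
    return (
        "SELECT\n"
        "  origin,\n"
        f"  ROUND(SUM(IF(bin.start < {int(threshold_ms)}, bin.density, 0))"
        " / SUM(bin.density), 4) AS fast_fcp\n"
        "FROM\n"
        f"  `chrome-ux-report.all.{yyyymm}`,\n"
        "  UNNEST(first_contentful_paint.histogram.bin) AS bin\n"
        f"WHERE origin IN ({origin_list})\n"
        "GROUP BY origin\n"
        "ORDER BY fast_fcp DESC"
    )


# Hypothetical client and competitor origins; swap in real ones.
print(build_fast_fcp_query(["https://example.com", "https://example.org"], "201809"))
```

The printed SQL can be pasted into the BigQuery console. Running the same query against successive monthly tables gives the month-to-month benchmark described in the list above.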

Hand-waving a Theory

“Is this just showing clients their competitors and saying ‘look how much better they are doing than you!’?”

“Yes, with hand-waving.”

“Right.”

This is difficult to tease into a coherent narrative besides the above quote. Please refer to the header image. Most SEOs will agree with this oversimplified explanation:

- Google uses site speed as a ranking factor.

- Improving site speed will improve the ability of a given page to rank.

How exactly this plays out in reality isn’t so agreed upon:

https://twitter.com/ohgm/status/1039526751800578048

My own hunch is that it should be document level, but is probably an average for the domain. There are reasons why Google may prefer not to reward websites with lightning fast doorways if the remaining experience is a slog.

In most cases Google can’t even rely on crawler data to inform on how fast a given page is for actual users. Rendering from Mountain View on a simulated Nexus 5 is not a fantastic indicator of how fast a website is for any given user. Chrome 41 and Googlebot are immune to some reasonably mainstream site speed techniques. Whereas CrUX data is based on:

“users who have opted-in to syncing their browsing history, have not set up a Sync passphrase, and have usage statistic reporting enabled”

As a thought experiment, how should Google treat site speed on a domain using Dynamic Rendering (read the getting started documentation)? They explicitly acknowledge that crawler experience is going to be significantly slower than the user experience. Do you think they would rather use crawler data than the data Chrome users are already sending them?

Speed as a ranking factor is designed to encourage faster websites. The reason behind this is primarily benevolent, to improve the real world user experience of the web (a foreseen outcome is making the web quicker to scrape). The messaging and reports we have access to mention both page level and site level information. This is the same elegant approach as with HTTPS-as-ranking-factor. We can expect Google to get increasingly pushy with this in the same manner (again, see update).

Top Tips for Waving Hands

If the graphs didn’t do it, variants of the following should help:

- Long term, Google would prefer to use user data rather than crawler data as a ranking factor.

- The CrUX report uses close to real world user data.

- The dataset is public and impartial (it doesn’t care). The dataset is updated monthly, so you can benchmark improvements against it.

- Google is already using this data to rank and score websites impartially (not for search, but elsewhere). It’s the best candidate for “Truth” available to us.

- Google could reasonably use some of this data as the speed ranking factors in organic search.

- By working on the site speed metrics in the report, you can measurably improve your score against commercial competitors and know that you are definitively ‘better than them’ on a speed benchmark Google are likely to use, now or in future, to influence organic rankings.

and the gold standard of all site-speed-as-SEO work:

If I’m wrong conversion rate increases and we make more money.

Implications

There are some interesting niche recommendations you can make off the back of this:

- If CrUX data is used in rankings, we should emphasise optimisation features that benefit Chrome users (e.g. WebP)

- If CrUX data is used in rankings, HTTP/2 has a clearer benefit (even if Googlebot is not using it).

- If CrUX data is used in rankings, all pages count, not just pages accessible to crawl.

It gets worse:

- If all pages count, then migrating slow sections of the website (think uncacheable pagetypes like checkout process) to third party domains might “solve” your speed problems from Google’s perspective.

(please don’t do this and blame me, but if you do it and it works, please praise me).

This general approach is helpful in getting developers onside – we can agree that a given site is pretty fast, but emphasise that these are changes to beat Google at their own game. This isn’t about blaming the people who you want to do things for you (see: SEO Twitter). It isn’t their fault.

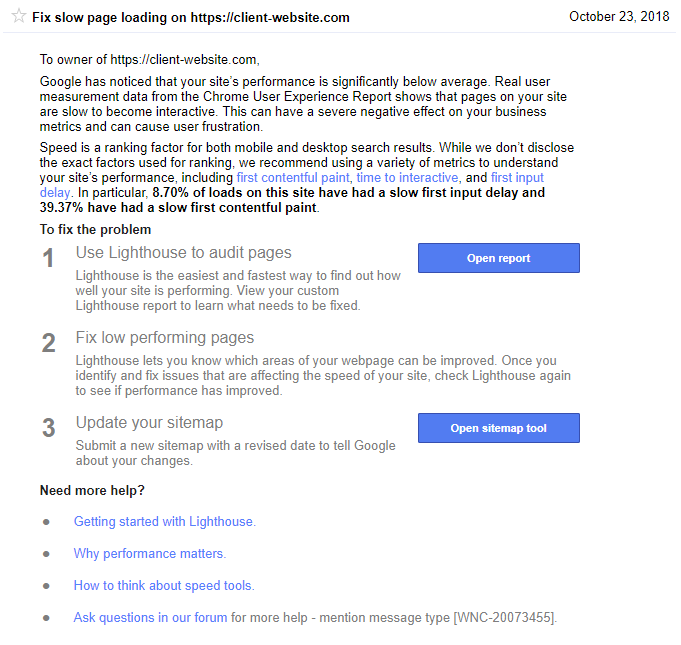

Update: CrUX in Search Console Messages

Since I wrote this piece and left it sitting in drafts for a month, a client received a warning for one of their websites. This is the first of these I’ve seen and thought it was worth sharing:

Fortunately, fixes are already in motion. Unfortunately, this is significantly better leverage than my conjecture was (though I now seem prophetic). Interestingly, I think it supports my hand-waving quite well – actual CrUX numbers are explicitly mentioned in Search Console warning messages.

Eagle-eyed readers will have noticed the use of XML sitemap dates to benchmark the changes. WHY.

If I’m reading this correctly, first input delay is already being measured for sites which haven’t opted in (in this instance, my client), but isn’t part of the accessible BigQuery dataset yet. To me this is a solid enough indicator that Google is mainlining Chrome user data for site speed judgements. Neat.

Regardless of this new information, there’s still the huge leap required to infer this is used for rankings. I’m not quite sure the truth matters here.

As always:

The main purpose of an SEO Consultant is to drag graphs upwards in exchange for currency.

and

It doesn’t matter how your site is, it matters how Google thinks it is.

Choose your graphs wisely.

Further Update on Search Console messages

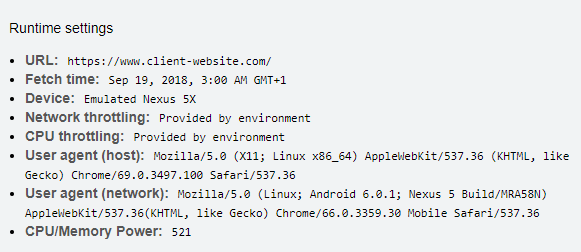

Clicking on the report takes you to an archived Lighthouse report hosted on http://httparchive.webpagetest.org/:

From this we can gather:

- The Lighthouse report being referred to in the Search Console warning is over a month stale.

- The report uses mobile (which you’d expect).

- The report was run at 3am, suggesting it’s scheduled.

- It wasn’t Googlebot asking to run the report, but a browser using the latest version of Chrome at the time.

This just gives me more questions. Comment below if you can shed some light on this.

Thanks to everyone at ohgmcon4 for sitting through the non-redacted version. ██ █████ ████ ████. Also Mark Cook for mentioning the idea the other day in a slack group. If he says “I’ve been saying something like this for a while”, he’s actually telling the truth.