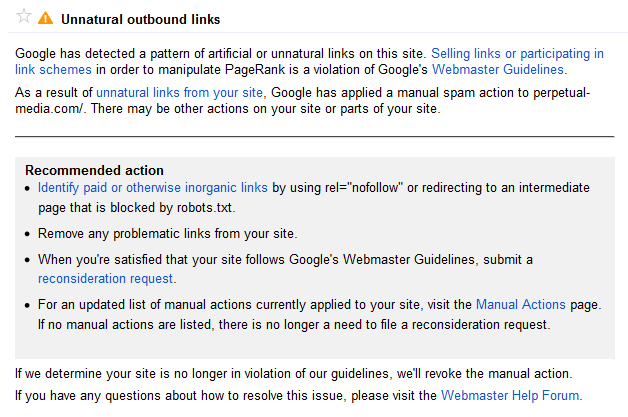

On March 20th, 2014 (hint), I received the following message for one of my sites:

Unnatural outbound links. Excellent. To be clear, this domain does have outbound links that were probably placed with the intent of manipulating PageRank. It’s irredeemably bad, a running joke. Google did the right thing in penalising it. I’ve been waiting for this.

Why?

Well, I’ve never seen an outbound link penalty before, and I’d like to see if I can shift the penalty using a technique that probably shouldn’t work.

My New Robots.txt

    User-agent: *
    Disallow: *

This took a few minutes to implement. I think it follows the letter of the law, if not quite the spirit: rather than redirecting external links through intermediate pages that are blocked in robots.txt, I’m simply blocking the whole site for all user agents at the source. Since Googlebot isn’t allowed to crawl the links, there’s no way they should be passing PageRank or manipulating the link graph in any way.
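To see why that two-line file shuts a compliant crawler out of every URL on the site, here is a minimal Python sketch of how a crawler might evaluate it. It assumes the matching rules Google documents for robots.txt (`*` matches any run of characters, a trailing `$` anchors the end, the longest matching rule wins, and Allow wins a tie) and picks the most specific user-agent group. The function names (`parse_groups`, `pattern_to_regex`, `is_allowed`) are hypothetical, and this is a simplified illustration, not Google’s actual parser.

```python
from __future__ import annotations

import re


def parse_groups(robots_txt: str) -> dict[str, list[tuple[str, str]]]:
    """Map each lowercase user-agent token to its (directive, pattern) rules."""
    groups: dict[str, list[tuple[str, str]]] = {}
    current_agents: list[str] = []
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if not last_was_agent:
                current_agents = []  # a fresh run of User-agent lines starts a new group
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            last_was_agent = True
        elif field in ("allow", "disallow"):
            for agent in current_agents:
                groups[agent].append((field, value))
            last_was_agent = False
    return groups


def pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a regex matched from the path start."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if this (simplified) rule set lets `user_agent` crawl `path`."""
    groups = parse_groups(robots_txt)
    agent = user_agent.lower()
    # Prefer the most specific named group that matches the crawler, else fall back to "*".
    named = [token for token in groups if token != "*" and token in agent]
    rules = groups[max(named, key=len)] if named else groups.get("*", [])
    best_len, allowed = -1, True  # no matching rule means the path may be crawled
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty rule value matches nothing
        if pattern_to_regex(pattern).match(path):
            longer = len(pattern) > best_len
            tie_goes_to_allow = len(pattern) == best_len and directive == "allow"
            if longer or tie_goes_to_allow:
                best_len, allowed = len(pattern), directive == "allow"
    return allowed
```

For example, `is_allowed("User-agent: *\nDisallow: *\n", "Googlebot", "/any-post/")` returns `False`: the `*` pattern becomes the regex `.*`, which matches every path, so no page (and therefore no outbound link on it) should be fetched.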

I’m satisfied that the site now conforms to Google’s Webmaster Guidelines (at least some of them)…

A couple of days later, I received the ‘critical health warning’ indicating that Googlebot couldn’t access the site. Excellent.

My Reconsideration Request

I have made it so the links from my site no longer pass value to their destinations. Thanks.

If a human reads this, I hope they reject this reconsideration request. If a computer parses it, I hope it tries to access each of the suspect pages on its list, fails to do so, and considers me repentant.

What do you think will happen?


I’ll update this post as soon as I’ve had a response from Google. I really don’t believe this should pass review, but if it does (or doesn’t), I’ll share the response here.

Should the reconsideration request be successful, I plan to, uh, remove the robots.txt directive and time how long it takes for the penalty to come back. Anything for science.

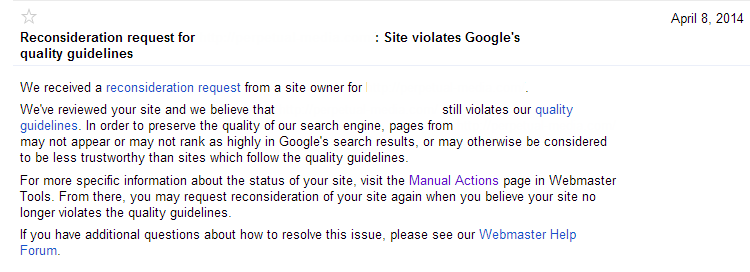

[Update] Their Response

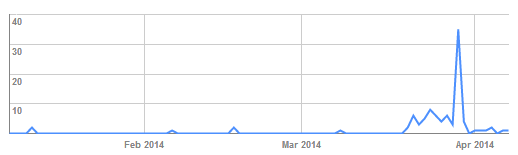

So that’s that: after a few weeks, the reconsideration request was rejected. The takeaway is that blocking the site in robots.txt may not be enough to recover from an unnatural outbound links penalty. Interestingly, Googlebot’s crawl activity increased after the robots.txt restrictions were put in place:

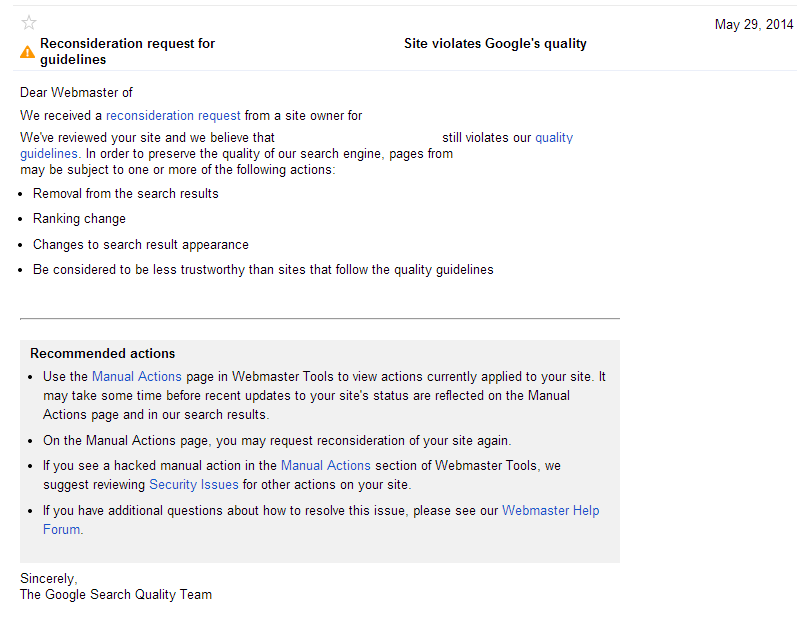

[Update] Their Second Response

I sent the same reconsideration request again, and this time received a different response:

This time they’ve let me know what to expect. Has anyone else seen this alternative response? Is anyone looking to buy a domain?

Interesting test, can’t wait to see what happens. I think it will be ignored.

Thanks for sharing this.

I recently recovered from a penalty just like this by installing a nofollow plugin on the site in question. Five days later, the penalty was lifted. I think your solution should have worked.