Website testing is one of those jobs I’m more interested in than SEO, but am too far gone to embrace a career change that would still keep me at a computer.

This isn’t a “testing is bad, actually” post, unless you are using it to avoid making decisions like in that declassified OSS field manual. We all have stories here.

The culture around running these tests can be tiring, but I would generally rather work with businesses that see the value in them than those that don’t (my favourite are those that skip testing in favour of doing what I suggest).

Anyway.

Why Might You Want To Cloak Your Tests?

When working with businesses that run web experiments, you will see some questionable tests. As an SEO, tests usually become your remit once you work out the process itself is negatively impacting performance in some way, or the business starts testing some feature that you’re 90% sure will.

Throughout this post I’m use “cloaking” quite loosely to mean “serving different content to bots”. By “cloak your tests” I mean “don’t show them to Googlebot” while they’re running.

I’ll discuss Google’s own definition shortly.

“Don’t show them to Googlebot” is my default setting, and the one I think testing tool platforms should also default to – unless you are doing something SearchPilot-ish, where your primary test subjects are the search engines.

Unfortunately, most testing companies are incentivised to say things like:

"Does {tool} harm SEO? No, this is a misconception - {tool} has zero impact on SEO".You do not really want to get into a back-and-forth with a customer success representative who will examine your concerns and eventually agree with them (but unfortunately, nothing can be done, our hands are tied, this is a feature of the platform we are looking to address at some point in the future unless we are bought out by one of three companies and our product becomes 5x more expensive because we’ve changed the game with A/I testing).

Because experiments are “just a test”, it’s very easy to tell ourselves that any potential downsides are limited, because they are only shown to x% of the audience, and only temporarily.

For the Search Engine crawler, it’s a near certainty that they won’t be assigned to a single audience, and will be exposed to most versions as they crawl.

Usually, I agree, this does not matter.

Depending on what types of content you're testing, it may not even matter much if Google crawls or indexes some of your content variations while you're testing. Small changes, such as the size, color, or placement of a button or image, or the text of your "call to action" ("Add to cart" vs. "Buy now!"), can have a surprising impact on users' interactions with your page, but often have little or no impact on that page's search result snippet or ranking.

https://developers.google.com/search/docs/crawling-indexing/website-testingMost types of test are not substantive enough changes to the page for you to stress about.

However, tests will typically be done by a different team who:

a) perform many tests, b) don’t understand SEO, and c) are not paid to give a single shit about rankings.

(I’m playing this up – most do understand that ‘traffic’ is one lever they can accidentally break)

But still, I recommend ruminating on the “it may not even matter much” in the above quote.

One week the team might decide to switch things up and start appending &test={ID} to internal links in the breadcrumb to make tracking easier (lol):

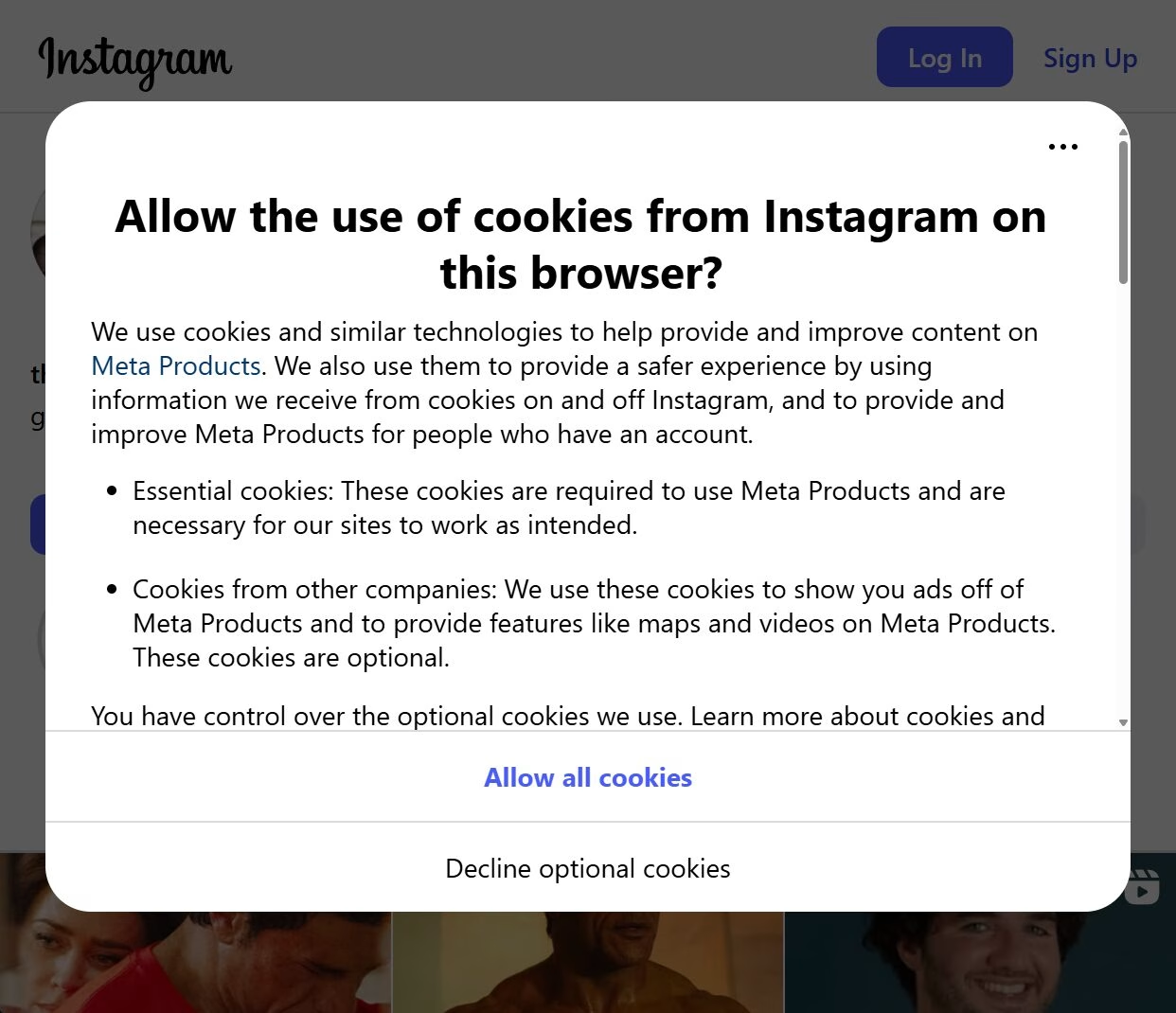

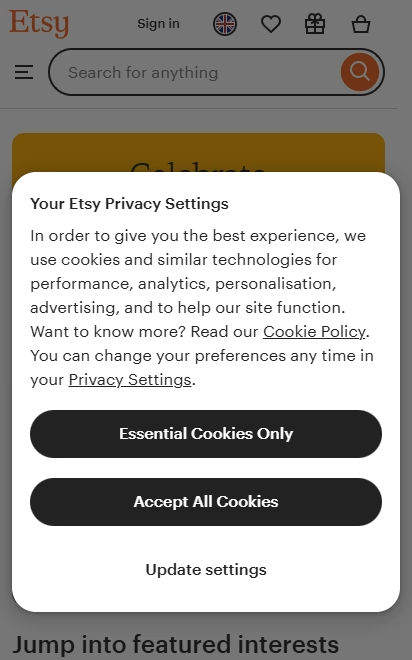

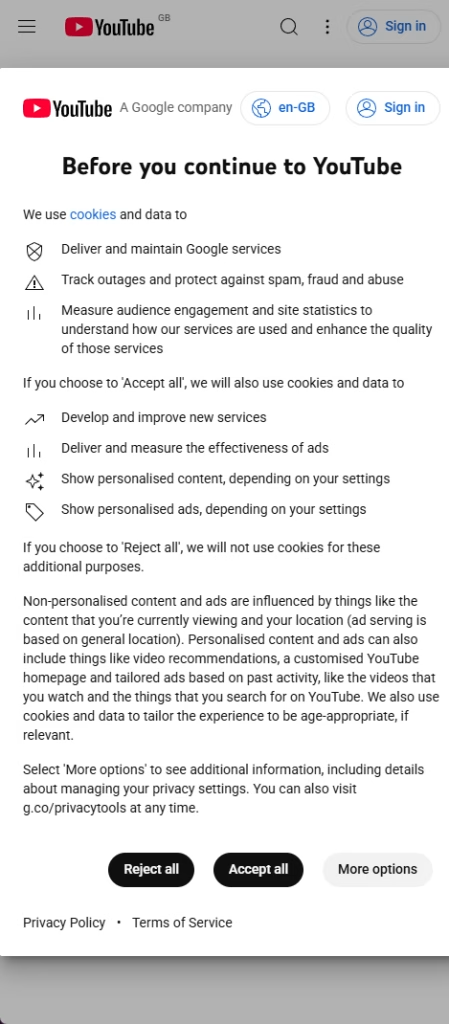

Or they might start testing cookie consent / email capture / competition popups which cover most of the viewport and grey out the background:

I like these cookie consent screenshots a lot, because like many mature websites they (correctly) do not serve those consent popups to Googlebot:

Anyway – while such test conditions are normal for human users to experience, the outcome may bring questions from your boss:

- Where are all these weird pages in Search Console coming from? Isn’t that supposed to be bad?

- Why are we slowly declining across these pagetypes?

- Web Vitals going crazy, please fix

Perhaps the test team are planning on moving the product description or above-the-fold content into a […] style lead-in?

Does the ‘read more’ CTA now load this content in via an interaction? Do the tabs?

“It works that way for the test but will be implemented differently if we decide to go for it. We will let you know when it’s live so you can check.“

Exhausting.

You might be thinking:

“but tests shouldn’t be using URL parameters…and we shouldn’t be serving full page popups which grey out the background, and they shouldn’t be implemented differently during the test. We don’t do that here. This is a real business and I’m respected.” etc etc

You’re probably right. Does the next person managing CRO tests on the site feel this in their bones? Maybe not.

Are you going to micromanage them? Really?

Are you going to catch when someone toggles the footgun switch in the UI that makes the test condition noindex, nofollow and intermittently drops some of your product pages?

Some of the tension between SEO and CRO is that removing features which traditionally support organic traffic can improve conversion rate, and adding features to support SEO can often worsen conversion rate. I’m not discussing that here – often a positive test will be the right decision for the business even if it has an expected downside for SEO.

One of the reasons I like excluding Googlebot from tests is that it gives you a better day one for any impact on organic performance vs gradual acclimation results of sometimes seeing the winner variant over the testing period (and then seeing it all the time without seeing the other variants). I’m aware this is not quite accurate.

In addition, if we crawl your site often enough to detect and index your experiment, we'll probably index the eventual updates you make to your site fairly quickly after you've concluded the experiment.

I don’t disagree with this either, but I’d rather have a firm date, thanks.

Cloaking tests as I’m fond of works both ways – by waiting until validation to ‘launch’, you delay the organic upside or downside. If it’s a good change for users, there’s a decent chance it’s a good change for SEO and you’ve just wasted time.

If you are able to be very involved with the testing program, this tradeoff may not be worth it to you.

Is It Difficult To Implement?

Excluding bots from your tests is not usually difficult technically or politically.

The people responsible for CRO likely do not give a shit about Googlebot’s experience. This tends to mean excluding Googlebot will be less of a struggle.

There’s also the carrot of ‘improved data’, since you are now excluding an audience that never gets out its credit card or clicks on things or enters their email address or shows exit intent etc.

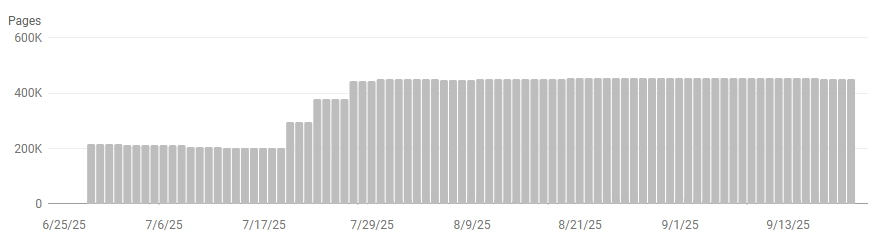

You’ll need to treat the day you make the change as a new day one for all running tests, or everyone will momentarily think they are a genius. I am reminded of how Search Console looks for most sites right now*.

(*Historical note – October 2025 clicks flat, impressions DOWN, CTR UP, Avg Rank UP – Google recently deprecated the feature allowing rank trackers to fetch 100 results per query, which was inflating impressions)

As an aside to any Google employees (hi), I recently worked with a site which ran a noindex, nofollow on test URLs for all UAs except for indexing crawlers (phew). This meant I got an adrenaline spike occasionally when using URL Inspection because “(compatible; Google-InspectionTool/1.0;)” got redirected to a test, showing that the homepage was going to drop out of the index.

While I know this UA should have been included, this is exactly the sort of scenario where showing the behaviour of redirects in the URL Inspection Tool’s interface (so I could see we were not still on the homepage URL) would save some guesswork.

Please?

But Google Explicitly Says You Shouldn’t Cloak Your Tests

The documentation is quite clear that my preference is frowned upon, and compared to other documentation, the snippet regarding cloaking on the testing page is harshly worded:

Don't show one set of URLs to Googlebot, and a different set to humans. This is called cloaking, and is against our spam policies, whether you're running a test or not. Remember that infringing our spam policies can get your site demoted or removed from Google search results—probably not the desired outcome of your test.

Cloaking counts whether you do it by server logic or by robots.txt, or any other method. Instead, use links or redirects as described next.

Really frowned upon.

So why I do I persist in recommending something which will only bring ruin?

Am I malicious or stupid? (yes)

Most Discussions About Cloaking Fall Apart

(or “why this is a blog post, and not a discussion”)

From Google’s end we see the following kinds of statement:

- Google: Cloaking is {definition}

- Google: {technique which meets part of the definition} is Cloaking.

- Google: We do not consider {technique which meets the same part of the {definition}} to be Cloaking.

We’re in “I know it when I see it” territory.

With the added complication:

- People (me) tend to use a slightly different definition for talking about Cloaking colloquially than Google’s own {definition}.

So, How Does Google Define Cloaking?

We get a neat one sentence definition from the spam policies page, with some examples:

Cloaking refers to the practice of presenting different content to users and search engines with the intent to manipulate search rankings and mislead users.

Examples of cloaking include:

Showing a page about travel destinations to search engines while showing a page about discount drugs to users

Inserting text or keywords into a page only when the user agent that is requesting the page is a search engine, not a human visitor

https://developers.google.com/search/docs/essentials/spam-policies#cloaking

I think we’d agree that pharma switcheroos and text stuffing are unambiguous examples of nefarious and deceptive cloaking.

For now, let’s focus on that definition:

Cloaking refers to the practice of presenting different content to users and search engines with the intent to manipulate search rankings and mislead users.

The ambiguity comes down to the use of intent. It’s one thing for creating rules for a legal system, or for you to judge my behaviour, but a questionable yardstick for something working algorithmically over text.

I’m not saying a Mechanical Turk approach would be fix this, I’m saying that accurately reading a mental state from some HTML with any certainty will be difficult.

But the ambiguity is part of the point – spam policies are meant to discourage bad behaviour, not accurately inform would-be spammers (that is, you and I) of the boundaries of play. The goal is for most people to adopt over-compliance “to be on the safe side”.

That said, I think this definition is actually remarkably weak (if taken exactly as written). Three things have to simultaneously hold true:

1. Cloaking refers to the practice of presenting different content to users and search engines

2. with the intent to manipulate search rankings

3. and mislead users.

The second ‘and‘ means you have to also intend to mislead users (not just the search engines). You have to meet all clauses for it to be Cloaking by Google™.

You might have seen the earlier YouTube example and thought I’d included it as a hypocrisy jab. Not so! I think we can agree this has not been done with any intent to manipulate search rankings (this would be quite an indirect approach for them to take), or mislead users.

I think we should be lots more of that sort of (1.)-only cloaking (this is the colloquial use I mentioned earlier).

WARNINGWARNINGWARNINGWARNINGWARNING

While I also believe Google’s actual enforcement of cloaking is very weak, I also believe it’s stronger than their given three clause definition.

WARNINGWARNINGWARNINGWARNINGWARNING

What I Reckon The Rules Are

Something that comes up a lot in nerd communities (hi) when they’re being especially tiresome is distinguishing Rules-as-Intended vs Rules-as-Written.

Think of it as a personality test for how well you can cope with ambiguity.

I think that in practice (that is, enforcement), the definition is best read in a stricter form:

Cloaking refers to the practice of presenting different content to users and search engines where we think the intent is to manipulate search rankings and/or mislead users.

“where we think the intent is to” is the evergreen reminder that the actual truth of the matter (e.g. your intent) does not matter.

You are not the judge. You have no say. Good luck with any appeals.

For example:

Cloaking counts whether you do it by server logic or by robots.txt, or any other method. [...]

If you're using cookies to control the test, keep in mind that Googlebot generally doesn't support cookies. This means it will only see the content version that's accessible to users with browsers that don't accept cookies.

-https://developers.google.com/search/docs/crawling-indexing/website-testing#dont-cloak-your-test-pages

Although there has been a conscious decision for Googlebot to have an abnormal approach to cookie acceptance/storage, any fallout from this (you are breaking our guidelines by cloaking) would be your problem, not theirs.

“That Sign Can’t Stop Me Because I Can’t Read“

As long as Google’s a source of quality traffic, Googlebot is a privileged user that needs help, even if it consistently makes mistakes none of your other users are able to (or am I talking about chatbot crawlers?)

If your site uses technologies that search engines have difficulty accessing, like JavaScript or images, see our recommendations for making that content accessible to search engines and users without cloaking.

- https://developers.google.com/search/docs/essentials/spam-policies#cloaking

If your site uses new fangled technology like JavaScript or images, you might just be cloaking.

“That Sign Can’t Stop Me Because I Can’t Read“

AND/OR

Regarding my “and/or mislead users” suggestion – I think the actual definition is too weak here – most scenarios in which we want to manipulate search engine rankings don’t involve intending to mislead users, but a scenario where we intend to mislead users by presenting different content to search engines would probably be a nefarious one which might as well be fall under the cloaking policy.

Hypothetical – a medical information site is hacked. Being pure YMYL, it already ranks well (#1) for erectile dysfunction keywords with in-depth informational content. The hacker replaces it with a golden era squeeze page and their own checkout for as many types of dick pills as you can imagine.

The search engine is being misled (it wouldn’t ordinarily rank the squeeze page), and the user has probably been misled by the SERP snippet. But the hacker is relying on the existing ‘erectile dysfunction’ ranking. Their approach relies on preservation, not manipulating the search ranking.

This is materially different from the earlier example:

Showing a page about travel destinations to search engines while showing a page about discount drugs to users

Our hacker is at least a good enough marketer to know that people looking for information about erectile health are a more receptive audience than people researching a holiday. I think the and/or strengthens the definition.

I think as written, Google’s own cloaking definition would not consistently catch their own second example of cloaking:

Inserting text or keywords into a page only when the user agent that is requesting the page is a search engine, not a human visitor

(this wouldn’t necessarily fail 3 – “and mislead users”)

This is an obscenely long post for you to skim through, so I’d like to jump back to an earlier point – how Google communicates disapproval about cloaking.

###########

- Google: {technique which meets part of the definition} is Cloaking.

- Google: We do not consider {technique which meets the same part of the {definition}} to be Cloaking.

###########

{technique which meets part of the {definition}} is Cloaking

No surprises here.

Don't show one set of URLs to Googlebot, and a different set to humans. This is called cloaking, and is against our spam policies, whether you're running a test or not. Remember that infringing our spam policies can get your site demoted or removed from Google search results—probably not the desired outcome of your test.

Cloaking counts whether you do it by server logic or by robots.txt, or any other method. Instead, use links or redirects as described next.

If you're using cookies to control the test, keep in mind that Googlebot generally doesn't support cookies. This means it will only see the content version that's accessible to users with browsers that don't accept cookies.

This might be a reading comprehension failure on my part – but I don’t think this meets their definition.

Perhaps they are fine with the type of cloaking I endorse (serving different HTML on the same URL), but this text very much reads like any approach serving up different content would be Cloaking™.

I think “not showing Googlebot A/B tests” can easily be achieved without holding an intent to mislead users (3), and potentially done without the intent to manipulate search engine rankings (2).

By their written definition, I think this should get a pass, but in practice I think we’re better off with that and/or I mentioned.

Sometimes it’s cloaking (ok), and sometimes it’s cloaking (bad).

We do not consider {technique which meets the same part of the {definition}} to be Cloaking

Dynamic Rendering

(while this approach is “deprecated”, the messaging is very clear)

Dynamic rendering is not cloaking

Googlebot generally doesn't consider dynamic rendering as cloaking. As long as your dynamic rendering produces similar content, Googlebot won't view dynamic rendering as cloaking.

When you're setting up dynamic rendering, your site may produce error pages. Googlebot doesn't consider these error pages as cloaking and treats the error as any other error page.

Using dynamic rendering to serve completely different content to users and crawlers can be considered cloaking. For example, a website that serves a page about cats to users and a page about dogs to crawlers is cloaking.

https://developers.google.com/search/docs/crawling-indexing/javascript/dynamic-rendering#cloaking

This is the first clause – what I’m calling the colloquial definition:

“Cloaking refers to the practice of presenting different content to users and search engines“

When bots and users request a URL, they are provided with substantially different responses, but if it’s set up correctly Googlebot gets a baked cake, while users get butter, eggs, flour, sugar etc.

Here the similarity to the end product is the (sensible) threshold by which you are judged. This also fits that intent clause doing it’s job.

(just how non-compliance gets assessed in normal scenarios is somewhat baffling – prerendering is usually being used as a workaround because WRS isn’t experiencing the site the same as everyone else, often because Googlebot is being idiosyncratic and skipping resources. Again – their decision becomes your issue.)

I don’t have a problem with this not being Cloaking (according to Google™).

It’s beneficial to all parties and clearly does not meet their own definition.

Where I do see a problem…

Paywalls / Loginwalls

With paywalled content, bots and users get a materially different experience. In most cases it echoes an intrusive interstitial, only… more intrusive.

However:

If you operate a paywall or a content-gating mechanism, we don't consider this to be cloaking if Google can see the full content of what's behind the paywall just like any person who has access to the gated material and if you follow our Flexible Sampling general guidance.

The flexible sampling guidance is very gentle:

There is no single value for optimal sampling across different businesses. However, for most daily news publishers, we expect the value to fall between 6 and 10 articles per user per month. We think most publishers will find a number in that range that preserves a good user experience for new potential subscribers while driving conversion opportunities among the most engaged users.

This reads like they don’t want to tell publishers what to do (it’s a delicate relationship with a lot of litigation).

Regarding flexible sampling – I’ve not seen a site penalized for setting monthly samples to zero. Perhaps I am inexperienced here – please let me know if you have and I’m being naïve and my views need adjusting.

Did You Know? Rankings tend to improve when you start giving Googlebot access to everything rather than the 50 word snippet humans are getting (i.e. exclude it from any metering). Amazing!

As a reminder:

1. Cloaking refers to the practice of presenting different content to users and search engines

2. with the intent to manipulate search rankings

3. and mislead users.

In most cases, I think paywall implementations succeed in providing 1 and 2, with Google assisting to supply a healthy dose of 3. But somehow this is not Cloaking™.

If we metered (or treated) Googlebot like a regular user, we know we would rank badly, so we don’t do this. When we (I) let Googlebot through a paywall, it is only because our (my) intention is for the paywalled content to rank higher.

I don’t know how you could internally sell the work for making an exception for search engine crawlers without making a case about organic search. How could the business care?

“Yes, you see we’re going to do this thing which we expect to result in increased traffic, revenue, subscriptions etc via the organic channel, but this is a foreseen unfortunate consequence rather than the intention of our action, which we’re doing for some other serious business reason”.

I think it is Cloaking™ by all earlier definitions, except Google communicates that it doesn’t consider it to be.

As a search professional, I’m glad it’s an option, but as a user of Google’s search engine, it is a dog shit experience to be hit with a paywall from the SERP the first time you visit a site.

GIVEN I am a human user of Google Search

WHEN I click on a result and immediately hit a paywall

THEN The content is not what I expected, so I feel misled

On the face of it, this seems like a loophole in the algo. Perhaps those quick bounce backs to the SERP are forgiven as they are yet another chance to “shake the cushions” (for one last ad).

Does the site intend to mislead users when it presents them a paywall (“you thought you were getting the content you clicked on from the SERP? lol”)?

No… it’s just another easily foreseen but not intended unfortunate consequence of their intentionally intended actions (made with intent).

I would say Google Search bears responsibility for some of the misleading by ranking such content, rather than solely the content provider themselves. But again, I think this is the easily foreseeable outcome, rather than their intent (ok, enough already, I’ll stop).

A recent addition to the JavaScript guidance is interesting:

If you're using a JavaScript-based paywall, consider the implementation.

Some JavaScript paywall solutions include the full content in the server response, then use JavaScript to hide it until subscription status is confirmed. This isn't a reliable way to limit access to the content. Make sure your paywall only provides the full content once the subscription status is confirmed.

https://developers.google.com/search/docs/crawling-indexing/javascript/fix-search-javascript#paywall

Regarding the last sentence, this is good advice in the context of “do I want people to easily bypass my paywall?” and/or “do I want LLM crawlers to ingest my paid content for free and serve it up to their users?“.

In the context of “I want my pages to rank far, far better than they deserve to in Google Search“, I suspect it’s not as helpful.

Could you use Paywall Structured data to do some form of the ‘bad’ type of cloaking? Perhaps:

"hasPart":

{

"@type": "WebPageElement",

"isAccessibleForFree": false,

"cssSelector" : ".cloakedcontentweshowtoyoubutnothumans"

}In this scenario, the paywall messaging is a squeeze page for pills (flexible sampling rate: zero).

We charge humans $1B/s to access the true comprehensive content about erectile dysfunction.

We're ok because "Google can see the full content of what's behind the paywall just like any person who has access to the gated material"

This is somehow not Cloaking™? Ridiculous.

In the past I was hesitant to implement any sort of legitimate paywall structured data, because I believed it was essentially snitching on yourself.

It makes it explicit that “all this juicy content? Yeah they usually don’t see it“.

I thought it would be more than sensible for a search engine to devalue pages that do this. I don’t have access to the algo. Maybe, uh, maybe they do rank it appropriately, or maybe they believe that the invisible hand of user-interaction signals will rank the content where it deserves to be.

As another aside, have you seen how many high performing sites are hijacking the back button now? (especially if you come in via Google Discover)

Tiresome.

I know this was a tangent, but as a user I think for most human users paywalls and content-gating should be downweighed more than it currently is.

Platform Decisions

Do you have any sites or clients on Shopify?

Shopify is currently “cloaking” most paginated collections on Shopify sites to hide a URL parameter which lets pages load faster for users: (https://community.shopify.dev/t/a-new-phcursor-pagination-parameter/17715/22)

Is this the right decision? Yes.

Is this cloaking by Google’s definition? No.

There’s no intent here to mislead users.

- Would it benefit Google to crawl any of these other URLs? – No.

- Are they indexing them anyway? – Yes.

- Are Google going to do any enforcement here? – No.

We might worry because it is literally different URLs like they say is Cloaking™ with A/B testing (!), but the content really doesn’t change.

Incidentally, whatever Shopify’s doing, it doesn’t seem to have worked- https://www.google.com/search?q=inurl:phcursor (at least, it was messed up when I published this post).

There are a couple of explanations that spring to mind:

- Shopify’s detection/serving isn’t good enough (or wasn’t when they launched).

- Googlebot is avoiding detection on a much larger scale than we’ve previously seen.

I think the latter is unlikely. I suggest looking at the crawl data you have for any sites on Shopify to put your mind at rest.

Leaving aside “is fixing this a worthwhile change?” – I’m saying that I would not be worried about getting more aggressive here to ensure Googlebot is not being served the phcursor parameter.

My risk tolerance is similar r.e. Cloaking tests.

Why You Should Not Cloak Your Tests

“I’m a big baby who won’t risk a catastrophic result for my clients in the name of micro optimisations, waa”

Grow up?

My general advice would be to keep Google’s literal written definition in mind when considering how risky your ‘cloaking’ is.

It is much less restrictive than the common usage of the term, especially if you are not doing shitty things.

In addition, please don’t do shitty things.

I would also keep in mind the de facto probably intended and/or version of the definition.

Disclaimer: When I said you should cloak your tests 100% yes, great idea – it was a prank just kidding ha ha tricked you.

(this site always has some minor cloaking going on so I am figuratively, literally, and narratively asking to be struck down with this post)

I'd love it if you could share stories of sites you've seen get in trouble for serving different content to bots and users in ways you consider "innocent".

I haven't seen it happen yet.

Personalisation is meant to be the future of the web, right?

Great article as ever and it had me ranting to myself which is a good sign (against Google, not you). I don’t want to sound like a Search Engine Roundtable commenter but it does feel like “one rule for them and one rule for us” when it comes to documentation definitions and implementation and I agree that paywalled content should be treated differently given the intent behind paywalling and the content being different (full vs. truncated).

Excellent way to slip dick pills into a detailed treatise on the implied semantics of intent.

Just anecdotally: at the brand I work at currently, we slavishly obeyed Google’s instructions on split-testing when I joined in 2019, only to see our rankings tank because Google seemed to get very confused at having seen two different sets of content being returned at the same URL. So we’ve excluded Googlebot (shock!) from all a/b tests since then without any issues.

It’s a big site, nearly a billion organic sessions a year. And we probably run a few hundred a/b test per year. Haven’t been caught with our pants down once.