I’ve been playing around with Sift this weekend as a potentially friendlier and faster alternative to grep (not that grep is slow). Although the tool clearly has broader applications than parsing server logs, it’s very well suited to that job. If you’re currently using grep to parse logs, I’d recommend checking Sift out.

srcset on WordPress

I’m interested in speeding up WordPress. If you read around on the topic, you’ll find lots of advice on the bigger performance gains (enable server-side caching, enable browser caching, etc.). I’m also interested in more theoretical speed gains (here’s that post on saving GIFs at a 90-degree rotation and then rotating them back with CSS to save thousands of bytes).

Most sites are viewed at a number of different resolutions. Typically, users on mobile devices are forced to download all the desktop assets, which are then wrapped in a mobile theme. If the website is also trying to satisfy ‘retina display’ users, it may serve everyone images two or three times larger than most of them need. This isn’t a great approach: by design, mobile users, who often have the worst connections, smallest screens and weakest processors, get the most frustrating experience. I believe srcset can partly alleviate (not solve) this issue, and offer marginal speed gains at smaller screen resolutions.

Enter srcset

So, what is srcset?

Open this page, resize your browser and reload it. That’s srcset.

Srcset presents browsers with options for an image (“a1.jpg is 200px wide, a2.jpg is 400px wide, a3.jpg is 800px wide”). Browsers can then choose which image they should load based on what they know about the user (viewport width, screen resolution, orientation, connection speed, astrology). You can read more about it from Jake Archibald, here. It’s very clever.
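As a rough illustration of that choice, here is a simplified model in shell: assume the image fills the whole viewport width, multiply that width by the device pixel ratio, and take the narrowest candidate that is at least that wide (falling back to the widest). Real browsers are free to weigh other signals (the HTML spec leaves the final pick to the browser), so treat the hypothetical `pick_candidate` helper as a sketch of the idea, not as how any particular browser behaves.

```bash
#!/usr/bin/env bash
# pick_candidate VIEWPORT_PX DPR "URL WIDTHw" ...
# Simplified model of srcset selection (NOT any browser's real algorithm).
# ASSUMPTION: the image is displayed at the full viewport width (sizes="100vw").
pick_candidate() {
  local viewport="$1" dpr="$2"
  shift 2
  # One candidate per line, sorted by width ("400w" sorts numerically as 400).
  printf '%s\n' "$@" | sort -k2,2n | awk -v v="$viewport" -v d="$dpr" '
    { url[NR] = $1; w[NR] = $2 + 0 }   # "400w" + 0 == 400 in awk
    END {
      need = v * d                     # physical pixels the slot needs
      for (i = 1; i <= NR; i++)
        if (w[i] >= need) { print url[i]; exit }
      print url[NR]                    # nothing wide enough: use the widest
    }'
}
```

For example, `pick_candidate 375 2 "a1.jpg 200w" "a2.jpg 400w" "a3.jpg 800w"` needs 750 physical pixels and prints `a3.jpg`, while a 320px-wide screen at 1x gets `a2.jpg`.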

Most importantly, it’s easy to set up on WordPress.

Srcset on WordPress

First I set up my srcset test page. It’s got pictures of dinosaurs and it weighs about 6MB thanks to the “Full-Size” image upload option.

Then I installed the Responsive Images plugin by the RICG. Roughly speaking, it works by offering the “small, medium, large” copies of each image that WordPress generates as candidates in the srcset attribute, for browsers to choose from. Activating the plugin takes us from this:

<img class="aligncenter size-full wp-image-1257" src="https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders.jpg" alt="dinoriders" width="2880" height="1800" />

To this:

<img class="aligncenter size-full wp-image-1257" src="https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders.jpg" alt="dinoriders" width="2880" height="1800" srcset="https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders-300x188.jpg 300w, https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders-1024x640.jpg 1024w, https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders-825x510.jpg 825w, https://ohgm.co.uk/wp-content/uploads/2015/10/dinoriders.jpg 2880w" sizes="(max-width: 2880px) 100vw, 2880px" />
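To check that the plugin is actually emitting srcset, you can pull the candidates out of a page’s HTML. This is a minimal sketch, assuming attribute values are double-quoted and candidate URLs contain no commas; `list_srcset_candidates` is a hypothetical name.

```bash
#!/usr/bin/env bash
# list_srcset_candidates: read HTML on stdin and print one "URL WIDTH" pair
# per line for every srcset attribute found.
# ASSUMPTIONS: double-quoted attributes, no commas inside candidate URLs.
list_srcset_candidates() {
  grep -o 'srcset="[^"]*"' |                # each srcset="..." on its own line
    sed -e 's/^srcset="//' -e 's/"$//' |   # strip the attribute wrapper
    tr ',' '\n' |                          # one candidate per line
    awk 'NF == 2 { print $1, $2 }'         # normalise whitespace
}
```

Usage, with a placeholder URL: `curl -s https://example.com/your-test-page/ | list_srcset_candidates`. For the markup above it prints four “URL width” pairs, starting with the 300w copy and ending with the 2880w original.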

Speeding Up Default WordPress

This post is an experiment in getting a Vanilla WordPress installation as fast as possible. Why? – Speed is Good.

Update: You can read part two on images here.

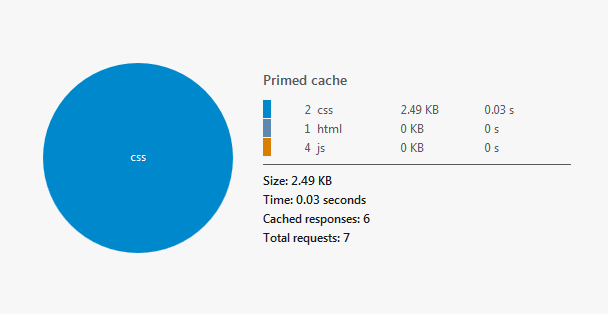

A new WordPress install was set up on a test domain. The 2015 theme was activated, and all plugins were uninstalled. This is what we’re benchmarking:

You’ll note that there really isn’t anything to it at the moment. This is intentional – if we can get this as fast as possible, then we have a foundation to work from as the site grows.
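One rough way to track a baseline like this from the command line is to time repeated requests with curl. This only measures the HTML document (images, CSS and scripts are not fetched), so it complements rather than replaces a full page-load tool. A minimal sketch; `time_page` is a hypothetical helper and the URL below is a placeholder.

```bash
#!/usr/bin/env bash
# time_page URL [RUNS]: request URL RUNS times (default 5) and print the mean
# time to first byte and mean total time, in seconds.
# Measures the HTML document only; sub-resources are not downloaded.
time_page() {
  local url="$1" runs="${2:-5}" i
  for ((i = 0; i < runs; i++)); do
    # -o /dev/null discards the body, -s hides progress, -w prints timings.
    curl -o /dev/null -s -w '%{time_starttransfer} %{time_total}\n' "$url"
  done | awk '
    { ttfb += $1; total += $2; n++ }
    END {
      if (n == 0) exit 1                 # curl produced no timings at all
      printf "runs=%d ttfb=%.3f total=%.3f\n", n, ttfb / n, total / n
    }'
}
```

For example, `time_page https://example.com/ 10` before and after each change gives a crude but repeatable comparison.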

Filter Server Logs to Googlebot

Googlebot is one of the most impersonated bots around. Scraping tools will often use this particular user-agent to bypass restrictions, as not many webmasters want to impede Googlebot’s crawl behaviour.

When you’re doing server log analysis for SEO purposes, you might mistake a scraping tool’s ‘crawl everything’ behaviour for Googlebot behaving erratically and ‘wasting crawl budget’. It’s important that you don’t rely solely on the reported user-agent string for any analysis you might be conducting; instead, combine it with other information to confirm that what you are seeing is actually Googlebot (and not you auditing the site with Screaming Frog).
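Google’s documented way to confirm a crawler is a reverse DNS lookup on the requesting IP (the name should end in googlebot.com or google.com), followed by a forward DNS lookup on that name, which must return the original IP. The sketch below assumes the `host` utility is installed and that its output looks like the sample lines in the comments; `is_google_hostname` and `verify_googlebot_ip` are hypothetical names.

```bash
#!/usr/bin/env bash
# Two-step Googlebot check: reverse DNS, then forward DNS.
# ASSUMPTION: `host` is installed and prints lines such as
#   "1.66.249.66.in-addr.arpa domain name pointer crawl-66-249-66-1.googlebot.com."
#   "crawl-66-249-66-1.googlebot.com has address 66.249.66.1"

# Succeeds if a hostname sits under googlebot.com or google.com.
is_google_hostname() {
  case "${1%.}" in                     # drop the trailing dot DNS tools print
    *.googlebot.com|*.google.com) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds only if the IP's PTR name is Google's AND resolves back to the IP.
verify_googlebot_ip() {
  local ip="$1" name
  # Step 1: reverse lookup; keep the first PTR name.
  name=$(host "$ip" 2>/dev/null | awk '/domain name pointer/ { print $NF; exit }')
  [ -n "$name" ] || return 1
  is_google_hostname "$name" || return 1
  # Step 2: forward lookup; one of the returned addresses must equal the IP.
  host "${name%.}" 2>/dev/null |
    awk -v ip="$ip" '/has address/ && $NF == ip { found = 1 } END { exit !found }'
}
```

For example, `verify_googlebot_ip 66.249.66.1 && echo verified`. The forward step matters: anyone can set a PTR record claiming to be googlebot.com, but only Google controls what googlebot.com names resolve to.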

In this post I’d like to offer snippets of code you can play around with to achieve this. You probably shouldn’t just copy and paste code from the internet into your command line.

If your data set is under a million rows, it might be easier to stick with Excel (a worksheet tops out at 1,048,576 rows), but you’ll miss out on the authentication magic (unless you’re willing to try this). The section below is recommended if you want to filter an unwieldy data set down to a manageable size.

Filtering Server Logs to Googlebot User Agent

First you’ll want to filter down to every request claiming to be Googlebot. This removes legitimate user traffic and cuts down on the number of lookups you have to make later. You need this information for your analysis, anyway.

We’ll be using grep, which ships by default with OS X and Linux distributions. Other utilities like awk or Sift will also do the job quickly, and all of them are significantly faster than Excel for this task.

If you’re on Windows, get GOW (GNU On Windows) to give you access to some of the command-line tools commonly available on Linux distributions and OS X (alternatively, you can use Cygwin). You’ll need to open a command window in the folder containing the files you wish to search: once GOW is installed, hold Ctrl+Shift and right-click inside that folder to get the option.

Grep uses the following format:

grep options pattern input_file_names

If you’re stuck at any point you can type the following into the command line:

grep --help

Right now, the only optional flag we need is -h, which stops grep from prefixing each matching line with its filename when it searches more than one file:

grep -h "Googlebot" *.log >> output.txt

This will append each line containing ‘Googlebot’ from the file/s specified into a file in the same folder called output.txt. In this case, it would search each of the ‘.log’ files in the current folder (‘*’ is useful if you’re working with a large number of server log files). The output file deliberately doesn’t end in ‘.log’, so re-running the command won’t make grep read its own output. Under Windows, file extensions can be hidden by default, but the ‘ls’ command will reveal their True Names. The double quotes around the pattern are optional here, but they’re good practice because they work on Mac, Windows, and Linux.

If you just want to filter a large data set down to the Googlebot user-agent, you can stop here.
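Before moving on to IP checks, it’s worth collapsing the filtered file to one line per address, since each distinct IP only needs checking once. This sketch assumes the client IP is the first field (as in Apache/Nginx “combined” logs) and that grep’s filename prefixes were suppressed with -h; `unique_ips` and `filtered.txt` are placeholders.

```bash
#!/usr/bin/env bash
# unique_ips FILE: print each distinct client IP in FILE with its request
# count, busiest first.
# ASSUMPTION: the IP is the first whitespace-separated field on each line.
unique_ips() {
  awk '{ print $1 }' "$1" |   # keep just the IP column
    sort | uniq -c |          # count identical neighbours
    sort -rn                  # highest counts first
}
```

For example, `unique_ips filtered.txt | head` shows the busiest addresses claiming to be Googlebot.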

Filtering Logfiles to Googlebot’s IP

You’ll now have a single file containing every log entry that claims to be Googlebot. Typically, SEOs would then validate these against Google’s IP ranges using a regex. The following ranges are from chceme.info:

| From | To |
| --- | --- |
| 64.233.160.0 | 64.233.191.255 |
| 66.102.0.0 | 66.102.15.255 |
| 66.249.64.0 | 66.249.95.255 |
| 72.14.192.0 | 72.14.255.255 |
| 74.125.0.0 | 74.125.255.255 |
| 209.85.128.0 | 209.85.255.255 |
| 216.239.32.0 | 216.239.63.255 |

We can use this information to parse our server logs with a hideous regular expression:

((\b(64)\.233\.(1([6-8][0-9]|9[0-1])))|(\b(66)\.102\.([0-9]|1[0-5]))|(\b(66)\.249\.(6[4-9]|[7-8][0-9]|9[0-5]))|(\b(72)\.14\.(1(9[2-9])|2([0-4][0-9]|5[0-5])))|(\b(74)\.125\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?))|(\b(209)\.85\.(1(2[8-9]|[3-9][0-9])|2([0-4][0-9]|5[0-5])))|(\b(216)\.239\.(3[2-9]|[4-5][0-9]|6[0-3])))\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)
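To apply a regex like this with grep, it needs extended syntax (grep -E). The sketch below is a variant rewritten as a plain POSIX extended regex, so it doesn’t rely on \b support, with each range matching the table above. It is anchored to the start of the line and followed by a space, assuming the client IP is the first field (as in Apache/Nginx “combined” logs). `GOOGLEBOT_IP_RE` and `filter_googlebot_ips` are hypothetical names.

```bash
#!/usr/bin/env bash
# POSIX ERE for the Googlebot ranges listed above.
# ASSUMPTION: the client IP is the first field, followed by a space.
GOOGLEBOT_IP_RE='^(64\.233\.(1[6-8][0-9]|19[01])|66\.102\.([0-9]|1[0-5])|66\.249\.(6[4-9]|[78][0-9]|9[0-5])|72\.14\.(19[2-9]|2[0-4][0-9]|25[0-5])|74\.125\.(25[0-5]|2[0-4][0-9]|1?[0-9]?[0-9])|209\.85\.(12[89]|1[3-9][0-9]|2[0-4][0-9]|25[0-5])|216\.239\.(3[2-9]|[45][0-9]|6[0-3]))\.(25[0-5]|2[0-4][0-9]|1?[0-9]?[0-9]) '

# filter_googlebot_ips FILE...: print only lines whose client IP falls in
# one of the ranges; -h keeps filenames out of the output.
filter_googlebot_ips() {
  grep -hE "$GOOGLEBOT_IP_RE" "$@"
}
```

Usage: `filter_googlebot_ips filtered.txt > googlebot-ip-checked.txt`. Range matching is only a first pass; the reverse/forward DNS check is what actually confirms an address.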

Could Rotating GIFs Improve Performance?

GIFs are funny.

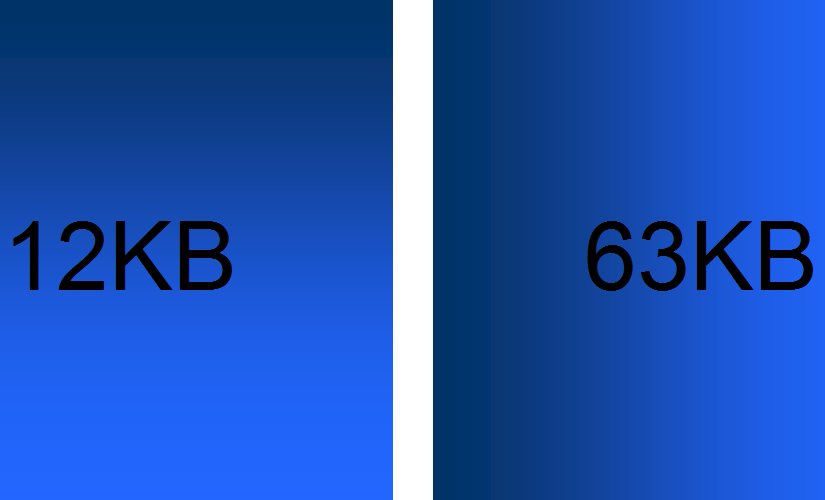

Take a look at the following beautiful gradients in GIF format:

The vertical GIF gradient weighs in at 12.6KB, whilst the horizontal GIF gradient weighs in at 63.9KB. (I have WP Smush running on here, but those are the pre-upload sizes. The larger image lost 2.08KB, whilst the smaller lost 37B.)

Learning to Type Faster

You probably spend a lot of time typing each day.

Although we get a lot of passive practice with typing, we rarely sit down to work on our technique or drill for speed. As a result, we don’t really improve. You’ll probably understand this if you play an instrument.

If most of your working day is spent at a keyboard, then increasing your typing speed is probably worthwhile. There isn’t a neat 1:1 relationship between typing speed and meaningful productivity. Improving your typing speed won’t solve all of your problems, as you hopefully spend more time thinking about what you’re going to type than actually typing it. However, working on your typing speed might just speed up your Google searches, slacking, and inbox clearing enough to get more done each day.