This was one of the more interesting issues I’d seen recently, so I thought it was worth sharing.

Issue

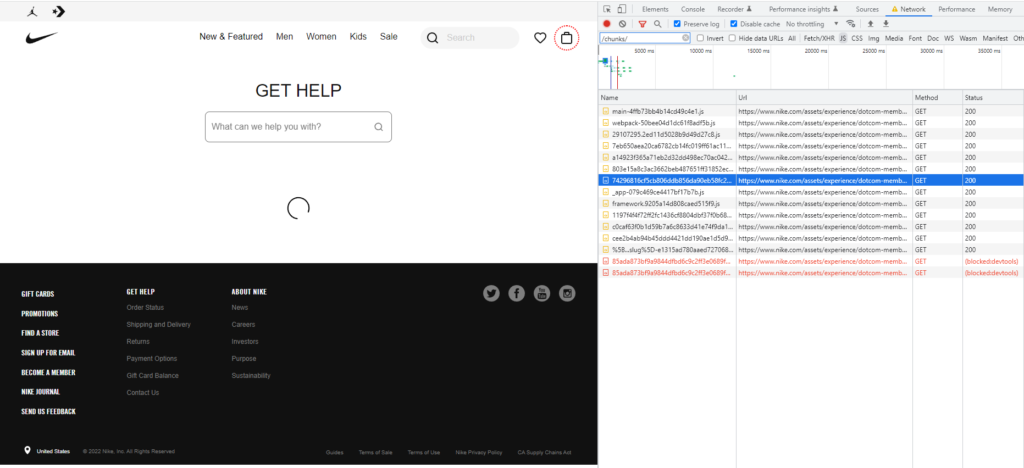

Looking at some of a client’s indexed pages, we could see that core content wasn’t consistently present in the recorded rendered DOM returned by the URL inspection tool.

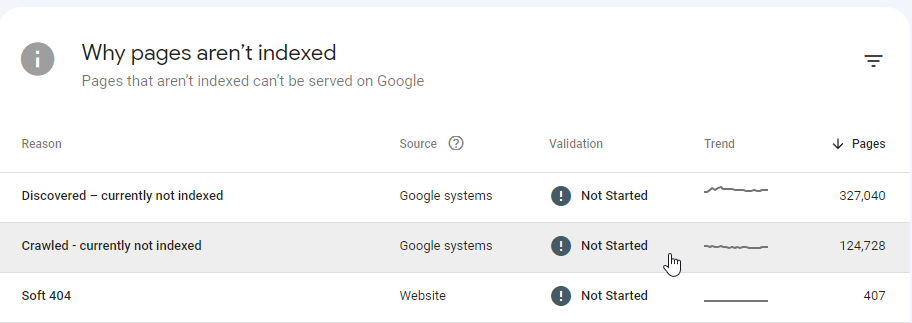

Although we can only get this information for indexed pages via Google Search Console, we can still reasonably infer that missing core content is likely a primary cause of why submitted URLs were being crawled but not indexed:

You may be thinking “ok cool, get that rendered server side, job done”

And you’d be correct.

But you’d also miss out on something neat.

Request Blocking

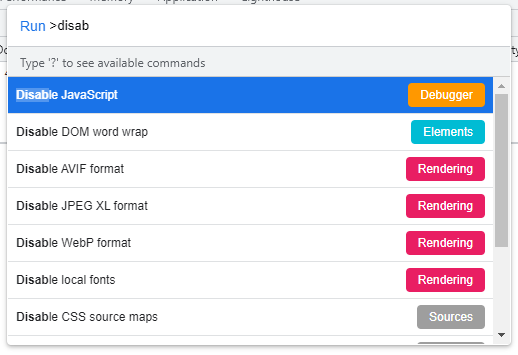

I think most SEOs are familiar with disabling JavaScript to see how a page would look without it:

This simulates a total failure of Googlebot to execute any JavaScript in order to render a URL.

While this could be an issue sometimes, it isn’t really the sort of failure we’re likely to see from Google when it’s attempting to index pages.

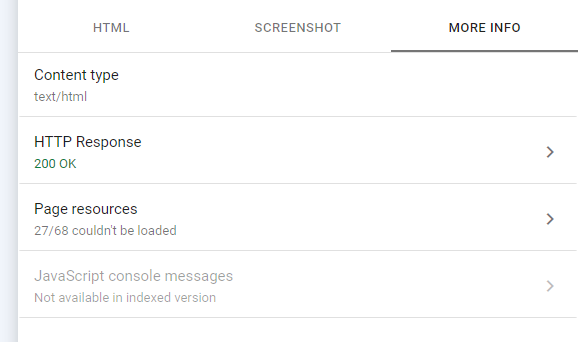

We’re more used to seeing partial failures or resource abandonment:

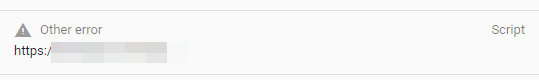

I am of course talking about our good friend, “Other error”:

Other Error: We didn’t think requesting this would be worth it, so we skipped the request in order to save money.

This is probably what people are talking about when they say “Render Budget”.

When I’ve mentioned simulating this by request blocking, several SEOs have recently asked me how to do it (“people always ask me”). Two SEOs have asked me this, but I’m sharing it with you nonetheless.

Request Blocking is one of the most useful diagnostic tools we have access to. It’s useful in this scenario and others (e.g. testing robots.txt changes, resource instability simulation).

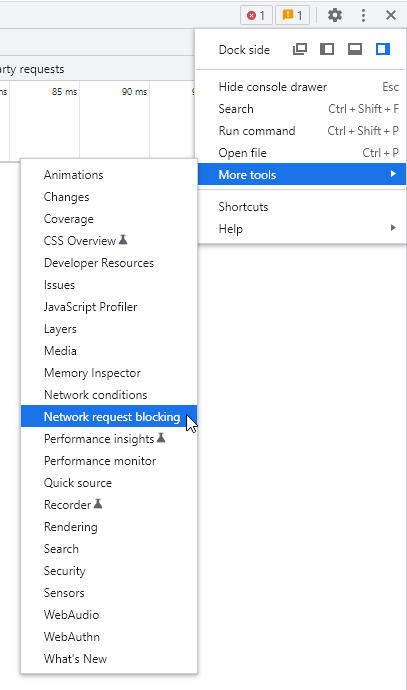

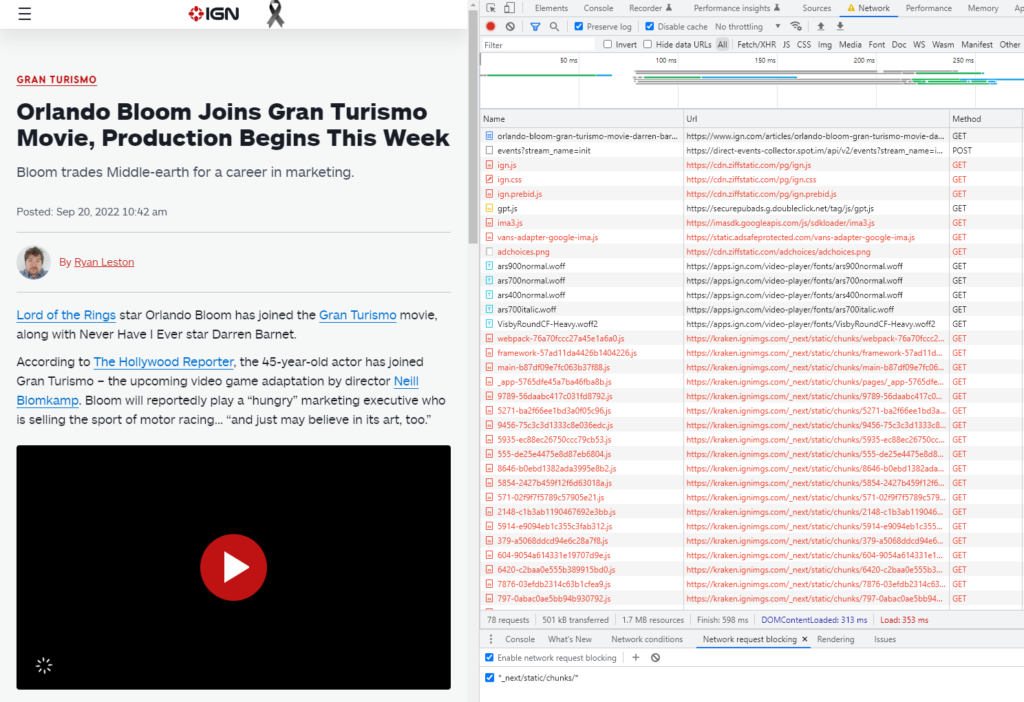

Here’s how you can do it in Chrome’s Dev Tools (F12) > More Tools > Network Request Blocking:

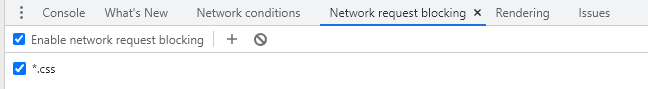

You can then write very basic patterns (* is wildcard) in the section that appears:

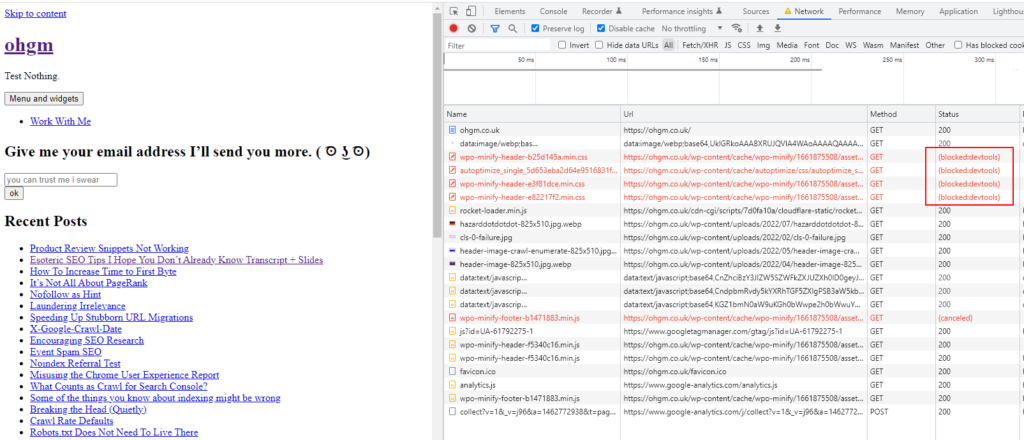

Here we’re simulating my website without CSS:

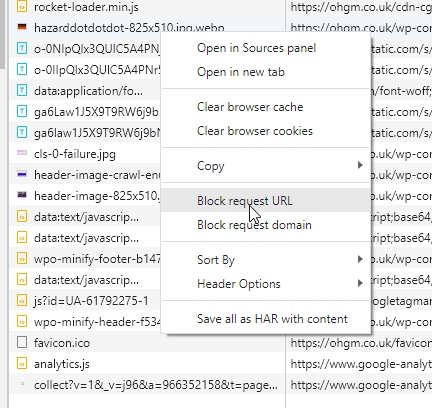
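If you want to sanity-check a pattern before reloading, here is a minimal Python sketch of how a `*` wildcard pattern might be matched against request URLs. It assumes `*` is the only special character and that a pattern can match anywhere in the URL. That is my reading of the behaviour rather than a documented guarantee, and the `example.com` URLs and function names are made up for illustration.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Turn a blocking pattern into a regex, treating * as the only wildcard."""
    literal_parts = [re.escape(part) for part in pattern.split("*")]
    return re.compile(".*".join(literal_parts))


def is_blocked(url: str, patterns: "list[str]") -> bool:
    # Assumption: a pattern may match anywhere in the URL (substring-style).
    return any(pattern_to_regex(p).search(url) for p in patterns)


# Hypothetical request URLs for a single page load.
urls = [
    "https://example.com/styles/main.css",
    "https://example.com/_next/static/chunks/main.js",
    "https://example.com/index.html",
]

# The "site without CSS" simulation from above.
blocked = [url for url in urls if is_blocked(url, ["*.css"])]
print(blocked)
```

A pattern this broad will also catch anything that merely contains `.css`, which is part of why it’s a sledgehammer.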

Using broad patterns is very much a sledgehammer approach. The more useful method for our purposes is blocking individual resources via Devtools > Network tab > Right Click > Block request URL:

And that’s it. You can now block individual resources. Remember to turn this off when you’re done.

What Do You Mean By A Render Gauntlet

I’d like you to open the following URL:

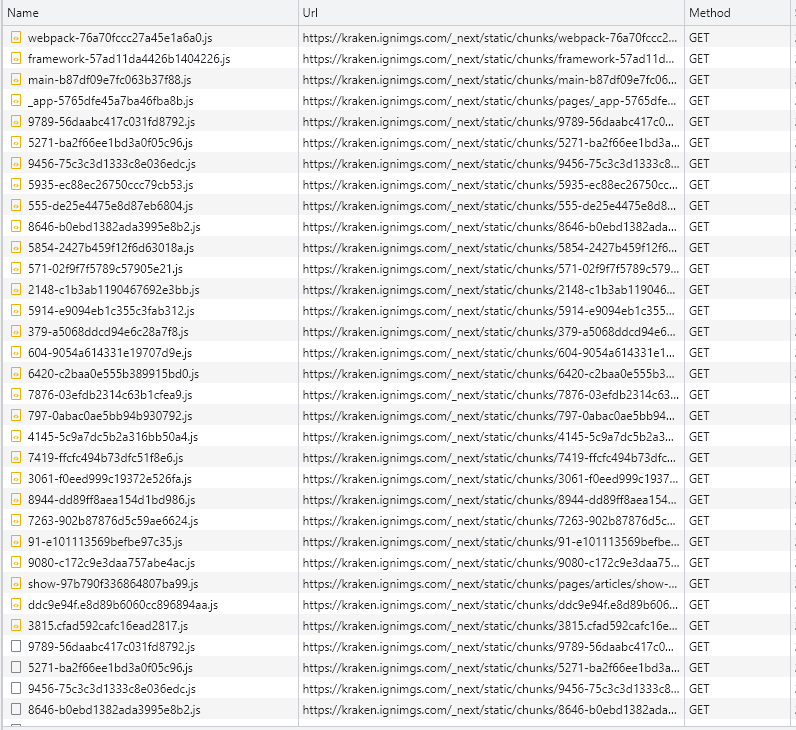

Then pick any one of the files under _next/static/chunks/ and block it:

Reload, and the main content will no longer render.

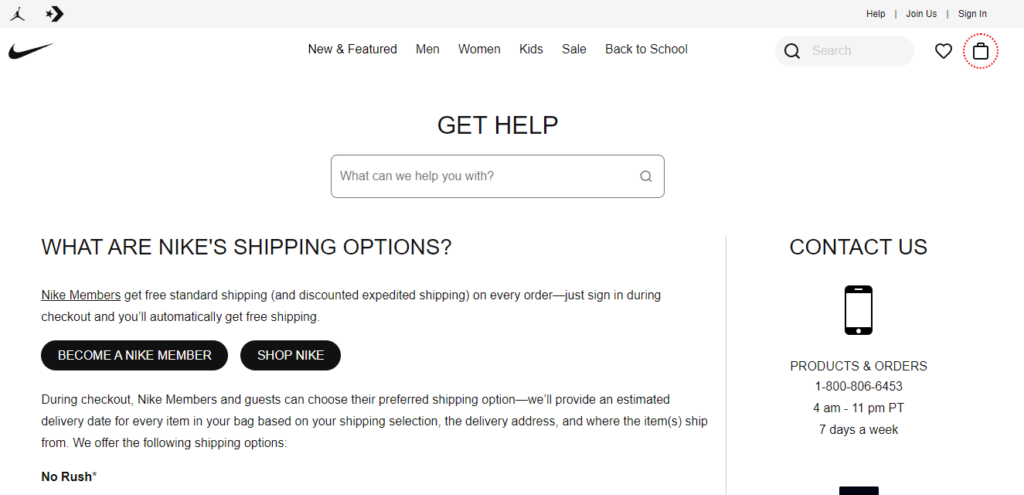

Our browser skipping a single resource would prevent the core content from loading. As for what’s going on with Nike here, it doesn’t look great:

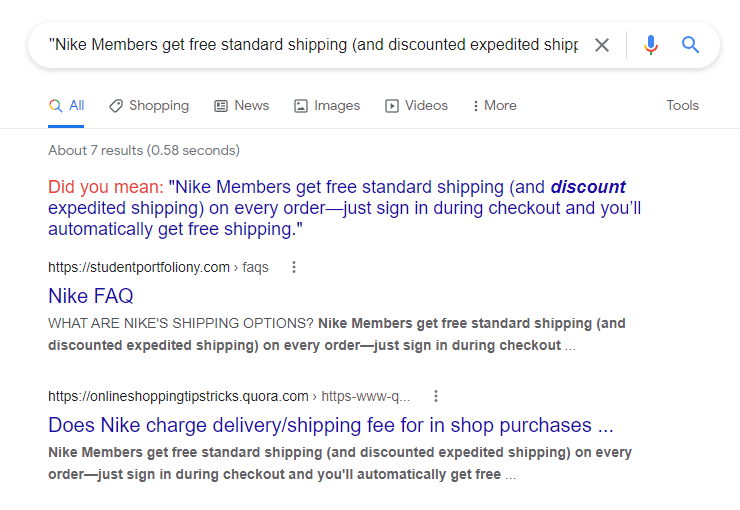

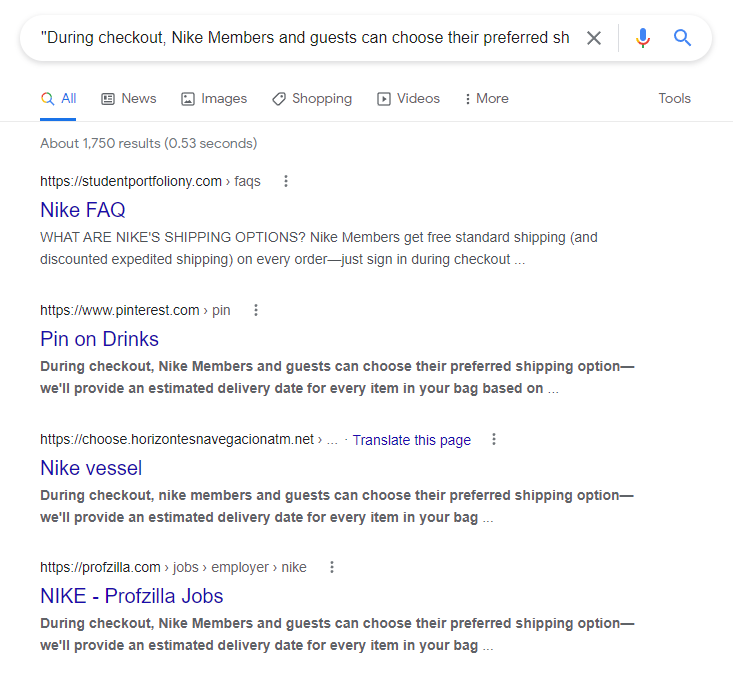

But…the page is indexed, where the correct content appears:

But this is just the meta description being returned in the initial HTML:

<meta name="description" content="During checkout, Nike Members and guests can choose their preferred shipping option—we’ll provide an estimated delivery date for every item in your bag based on your shipping selection, the delivery address, and where the item(s) ship from. We offer the following shipping options."/>

Scrapers are going to keep outranking them.

This isn’t one of those posts where I point out something that’s less than perfect and pretend it’s a big deal in the hopes of drumming up business.

Let me be clear.

If you work for Nike, do not contact me.

How dare you.

For my client, the core page type required ~25 of these chunks to render.

A single point of failure would be bad, but each of these chunks is independently sufficient to ‘render’ the page worthless.

And the risk of Google deciding to skip a resource scales with the quantity of chunks.

This is the ‘gauntlet’ we’re asking them to run.
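To put rough numbers on the gauntlet, here is a back-of-the-envelope Python sketch. It assumes every chunk is skipped independently with the same probability, and the 1% figure is purely hypothetical. I have no idea what the real skip rate is, and it is unlikely to be uniform or independent in practice.

```python
def all_chunks_load_probability(chunk_count: int, skip_probability: float) -> float:
    """Chance that none of the required chunks is skipped.

    Assumes each chunk is skipped independently with the same probability.
    """
    return (1.0 - skip_probability) ** chunk_count


# Hypothetical per-chunk skip rate, chosen only to illustrate the scaling.
HYPOTHETICAL_SKIP_PROBABILITY = 0.01

for chunk_count in (1, 25, 50, 100):
    probability = all_chunks_load_probability(chunk_count, HYPOTHETICAL_SKIP_PROBABILITY)
    print(f"{chunk_count:>3} required chunks: {probability:.1%} chance every chunk is fetched")
```

Under those made-up assumptions, a page needing 25 chunks renders fully about 78% of the time, and a page needing 100 chunks only about 37% of the time. The exact numbers don’t matter. The shape of the curve does.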

Again, this is probably what people are talking about when they say ‘render budget’.

This isn’t an issue with the platform. Next.js by default does pre-render. This change seems to be the origin of the increase in file quantity:

To make the page interactive, all of these bundles have to load as they depend on each other to boot up React in the browser. Because all of these bundles are required for the application to become interactive, it’s important they are as optimized as possible. In practice, this means not over-downloading code from other parts of the application.

[…]

The new chunking implementation leverages HTTP/2 to deliver a greater number of smaller sized chunks.

See also: https://github.com/vercel/next.js/issues/7631

This is a significant and sensible improvement. But if the site happens to be doing everything it can to avoid pre-rendering, then the “gauntlet” is substantially lengthened for every request added by this more efficient chunking.

OK, So What?

What I hope the Nike example above illustrates is that there are scenarios whereby we are not merely asking Google to execute some JS to get to core content, but requiring that they download and execute 25, 50, 100+ separate script files in order to see basic content on a single URL (it depends how many unique templates there are across the application).

Relying on them doing so is very optimistic.
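For a rough idea of how long the gauntlet is for a given template, you can count the chunk scripts referenced in the initial HTML. The Python sketch below uses only the standard library. The sample markup is invented, and the `_next/static/chunks/` fragment is just the Next.js path seen earlier, so swap it for whatever your framework uses. Treat the count as a lower bound, since it can’t see chunks that other scripts request later.

```python
from html.parser import HTMLParser


class ScriptSrcCollector(HTMLParser):
    """Collects the src attribute of every <script> tag in a document."""

    def __init__(self):
        super().__init__()
        self.script_srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.script_srcs.append(src)


def chunk_scripts(html: str, path_fragment: str = "_next/static/chunks/") -> list:
    """Return script URLs in the HTML whose path contains path_fragment."""
    collector = ScriptSrcCollector()
    collector.feed(html)
    collector.close()
    return [src for src in collector.script_srcs if path_fragment in src]


# Invented markup standing in for a page's initial HTML.
sample_html = """
<html><body>
<div id="__next"></div>
<script src="/_next/static/chunks/framework.js"></script>
<script src="/_next/static/chunks/main.js"></script>
<script src="/analytics.js"></script>
</body></html>
"""

chunks = chunk_scripts(sample_html)
print(len(chunks), "chunk scripts referenced:", chunks)
```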

Note: you can only see evidence of this skipping behaviour in Search Console for pages Google has decided to index. Pages which come up empty, they aren’t likely to decide to index. It’s an unhelpful place to gather evidence.

However, I also have a sneaking, slithering suspicion that ‘TEST LIVE URL’ is starting to show a more realistic (un)willingness to download all of a page’s resources.

Counter-intuitively this would be a helpful change – because a cheerful diagnostic tool is not useful:

“Yep, everything looks great!” – ( ͡~ ͜ʖ ͡° )

Sharper SEOs than myself will note that the so-called ‘render gauntlet’ isn’t the reason these Nike pages aren’t being indexed. It appears to be a separate issue with the dynamic rendering config. It’s at least an example (for diagnosis purposes) I’m able to share.

A Very Standard Solution:

The solution, as ever, is to return content you want Googlebot to see in the initial HTML, like IGN do here:

Blocking any/all of them (try it!) does not prevent the core content from appearing, because it is present in the initial HTML file the browser received, just like the good old days:

As such, we don’t have to live in hope that Googlebot is (probably!) processing our core content, because it has no choice. Once it’s on the page, it’s there.
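A quick way to check this without a browser is to look for a phrase from the core content in the raw HTML response, ignoring anything inside script and style tags. This is a minimal Python sketch using only the standard library. Both HTML samples are invented stand-ins (one client-rendered shell, one server-rendered page), and the function name is my own.

```python
from html.parser import HTMLParser

SKIPPED_TAGS = ("script", "style")


class VisibleTextParser(HTMLParser):
    """Collects text nodes that sit outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.text_parts.append(data.strip())


def initial_html_contains(html: str, phrase: str) -> bool:
    """True if the phrase appears in the text of the HTML, with no JavaScript executed."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.text_parts).split())
    return phrase.lower() in text.lower()


# Invented shell: the phrase only exists in a meta tag attribute.
client_rendered = (
    '<html><head><meta name="description" content="We offer the following shipping options."/>'
    '</head><body><div id="__next"></div>'
    '<script src="/_next/static/chunks/main.js"></script></body></html>'
)

# Invented pre-rendered page: the phrase is in the body markup.
server_rendered = (
    "<html><body><main><h1>Shipping</h1>"
    "<p>We offer the following shipping options.</p></main>"
    '<script src="/_next/static/chunks/main.js"></script></body></html>'
)

print(initial_html_contains(client_rendered, "following shipping options"))
print(initial_html_contains(server_rendered, "following shipping options"))
```

Attribute values such as the meta description are deliberately not counted as page text here, which mirrors the Nike example above.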

What now? (my demands)

I would like the URL inspection tool to return stored HTML for pages Google has chosen not to index. This would remove much of the guesswork of my job.

Understandably, this would not save Google money, so won’t happen (can you imagine trying to pitch this internally – “We know it’ll be expensive, but it would make Oliver Mason largely redundant, which I think we can all agree is for the best”?)

It would be good, though.

Aside

“Good” SEO Theory is action guiding. It should assist you in making broadly accurate predictions: “If we do X, then we can expect Y.”

This post was about understanding how something was failing.

This is a curiosity. What’s important is being able to demonstrate that something is failing and what steps could reliably fix it.

Our theory doesn’t have to do anything more (though it helps to be right occasionally).

Heroic.

Thanks Oliver. Great post!

One question remains though. If the client-side generated markup is there in the HTML retrieved from Google Search Console when clicking “View Crawled Page” (as opposed to using the Test Live URL feature), then can we safely assume that Google has seen it?

I would naturally assume so, but a year back we had a very strange issue where our breadcrumbs JSONLD wasn’t being picked up despite us seeing it there in the “View Crawled Page” HTML. And it was server side rendered too!

It transpires the issue was because it was rendered server-side in the body, and upon “app hydration”, the entire body would be replaced within a few milliseconds, putting a fresh copy of the breadcrumbs JSONLD in the body. I’m sure you know what I mean by “app hydration”, but just in case, it’s when the “lifeless” markup returned by the server gets replaced by a “living, interactive” one.

Google was insisting only 1 or 2 of our pages (<1%) had breadcrumbs on them but we knew they all did. All the schema testing tools said our schema was fine. It was only when we moved it from the body to the head (so that it wouldn't be replaced during that "app hydration" phase) that Google then started picking it up reliably.

What are your thoughts on this?

Hi Max,

I think we’re usually safe to assume so, provided what’s in the stored copy is what’s used for indexing.

I’ve worked on sites before where hydration was overwriting the canonical briefly to a placeholder value (the homepage), before reupdating it to the correct value (the one rendered server side). Google was intermittently picking this up as a homepage declared canonical, but we could also see that was the case in the “View Crawled Page” HTML. The ‘live’ testing tools are usually very optimistic/persistent and will tend to render more than normal, so will say everything’s fine r.e. structured data.

However, I think there’s a strong possibility that a separate system is doing the checking for whether to report/return breadcrumb. If I look in Google Search Console for the site used as an example in this post, there are >600k URLs indexed. The templates all have breadcrumb which validates (and as far as I know is stable, like it’s been for you since moving to head), but only 61k are being picked up. I’m not convinced this is an issue on our end; it may again be something budgetary on theirs. Checking around, I’m only seeing breadcrumb under-reported across different sites, so I think this idea might be worth investigating further?

Cheers,

Oliver

Soo the exact route where these JS chunks are stored is different for every JS framework?

Great article. Thanks so much for putting it together Oliver. I’ve been struggling with Next JS rendering on an ecommerce site and this article gives me some ‘tools/theory’ to keep on improving it, so cheers.

Probably