Googlebot is one of the most impersonated bots around. Scraping tools often use its user-agent string to bypass restrictions, because few webmasters want to block or throttle Googlebot's crawling.

When you're analysing server logs for SEO, you might mistake a scraping tool's ‘crawl everything’ behaviour for Googlebot behaving erratically and ‘wasting crawl budget’. Don't rely on the reported user-agent string alone; combine it with other information to confirm that what you're seeing really is Googlebot (and not, say, your own Screaming Frog audit of the site).
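One common source of that other information is DNS. Google documents verifying Googlebot by running a reverse DNS lookup on the requesting IP, checking that the hostname ends in googlebot.com or google.com, and then running a forward lookup on that hostname to confirm it resolves back to the same IP. The sketch below assumes the `dig` utility is installed; the function names are hypothetical, and only the hostname check works without network access.

```shell
#!/bin/sh
# Sketch of a reverse-then-forward DNS check for an IP claiming to be Googlebot.
# Assumes `dig` is available; function names are hypothetical.

# Succeeds if a PTR hostname sits under googlebot.com or google.com.
is_google_crawler_host() {
  _host=${1%.}  # dig prints hostnames with a trailing dot; drop it
  case "$_host" in
    *.googlebot.com | *.google.com) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds only if the reverse lookup lands on a Google hostname AND
# the forward lookup of that hostname returns the original IP.
verify_googlebot_ip() {
  _ip=$1
  _ptr=$(dig +short -x "$_ip" | head -n 1)
  [ -n "$_ptr" ] || return 1
  is_google_crawler_host "$_ptr" || return 1
  # -F: treat the IP literally (dots are not wildcards); -x: whole-line match
  dig +short "${_ptr%.}" | grep -qxF "$_ip"
}
```

For example, `verify_googlebot_ip 66.249.66.1 && echo genuine` prints "genuine" only if both lookups agree. Each check costs two DNS queries, so it makes sense to run it once per unique IP rather than once per log line.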

In this post I'll share code snippets you can experiment with to do this. That said, don't paste code from the internet into your command line without understanding what it does.

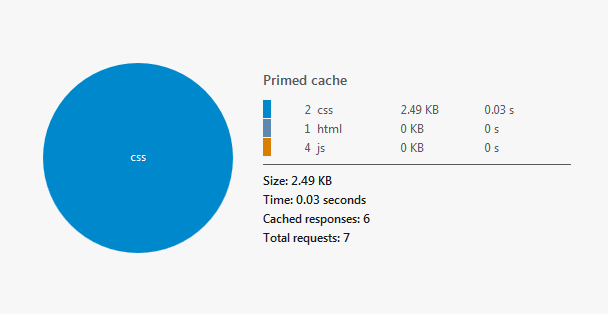

If your dataset is under a million rows, it may be easier to stick with Excel, although you'll miss out on the authentication magic (unless you're willing to try this). The next section is recommended if you want to filter an unwieldy dataset down to a manageable size.
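To see which side of that threshold a file falls on, you can count its lines before opening it. This is a minimal sketch assuming one request per line; Excel's hard limit is 1,048,576 rows per worksheet, a little above the million-row rule of thumb, and `fits_in_excel` is a hypothetical helper name.

```shell
#!/bin/sh
# Report a log file's line count against Excel's per-worksheet row limit.
# Assumes one request per line; fits_in_excel is a hypothetical helper name.
EXCEL_MAX_ROWS=1048576

fits_in_excel() {
  # wc -l counts newline-terminated lines; tr strips the padding some wc versions add
  rows=$(wc -l < "$1" | tr -d ' ')
  if [ "$rows" -le "$EXCEL_MAX_ROWS" ]; then
    echo "$rows rows: fits in Excel"
  else
    echo "$rows rows: filter on the command line first"
  fi
}
```

Usage: `fits_in_excel access.log`, where access.log stands in for your own file.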

Filtering Server Logs to Googlebot User Agent

First, filter down to every request claiming to be Googlebot. This strips out ordinary visitor traffic and reduces the number of lookups you'll have to make later; you need this subset for your analysis anyway.

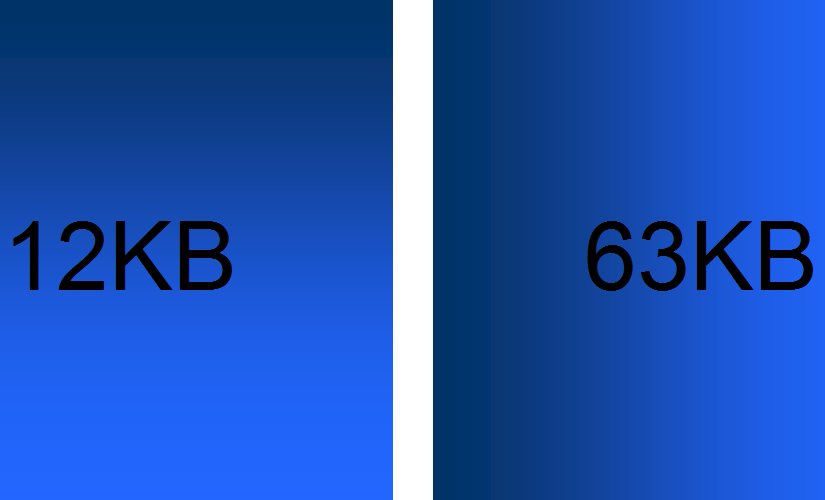

We'll be using grep, which ships by default with OS X and Linux distributions. Other utilities such as awk or Sift will also do the job quickly, and all of them are significantly faster than Excel for this task.
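For comparison, the same filter written with awk is a one-liner: a pattern with no action prints every matching line. This is a sketch; `filter_googlebot_awk` is a hypothetical wrapper name, and, like grep without `-i`, the match is case-sensitive.

```shell
#!/bin/sh
# awk version of the Googlebot filter; filter_googlebot_awk is a hypothetical name.
filter_googlebot_awk() {
  # /Googlebot/ is tested against each whole line; matching lines are printed.
  # Every file passed as an argument is read in turn.
  awk '/Googlebot/' "$@"
}
```

Usage mirrors the grep version: `filter_googlebot_awk *.log >> output.log`.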

If you're on Windows, install GOW (GNU On Windows) to get many of the command-line tools commonly available on Linux distributions and OS X (alternatively, you can use Cygwin). You'll need to open a command window in the folder containing the file(s) you wish to search: once GOW is installed, hold Shift and right-click inside that folder, then pick the option to open a command window there.

Grep uses the following format:

grep [options] pattern [input_file_names]

If you’re stuck at any point you can type the following into the command line:

grep --help

Right now, we don’t need any of the optional flags enabled:

grep "Googlebot" *.log >> output.log

This appends each line containing ‘Googlebot’ (grep is case-sensitive by default) from the specified file(s) to a file called output.log in the same folder. Here, ‘*.log’ searches every ‘.log’ file in the current folder, which is useful when you're working with a large number of server log files. Because ‘>>’ appends rather than overwrites, and output.log itself matches ‘*.log’, a second run would duplicate lines and also read the previous output, so delete output.log before re-running. On Windows, file extensions can be hidden by default, but the ‘ls’ command will reveal the full file names. The double quotes around the pattern are optional delimiters, but they're good practice because they work on Mac, Windows, and Linux.
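Before extracting the lines, it can help to see how many requests in each file claim to be Googlebot. The sketch below loops over the files with `grep -c`, which prints a count of matching lines rather than the lines themselves, and skips output.log so a previous run's results aren't counted; `count_googlebot_claims` is a hypothetical name.

```shell
#!/bin/sh
# Print "file:count" for each log, counting lines that contain "Googlebot".
# count_googlebot_claims is a hypothetical helper name.
count_googlebot_claims() {
  for f in "$@"; do
    # output.log also matches *.log, so skip it to avoid counting earlier results
    if [ "$(basename "$f")" = "output.log" ]; then
      continue
    fi
    # grep -c prints 0 (and exits 1) when nothing matches; the count is still printed
    printf '%s:%s\n' "$f" "$(grep -c "Googlebot" "$f")"
  done
}
```

Usage: `count_googlebot_claims *.log`.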

If you only need to filter a large dataset down to requests with the Googlebot user agent, you can stop here.

Filtering Log Files to Googlebot's IP Ranges

You'll now have a single file containing every log entry that claims to be Googlebot. Typically, SEOs would then validate each requesting IP against Google's known IP ranges using a regular expression. The following ranges are from chceme.info:

| From | To |
| --- | --- |
| 64.233.160.0 | 64.233.191.255 |
| 66.102.0.0 | 66.102.15.255 |
| 66.249.64.0 | 66.249.95.255 |
| 72.14.192.0 | 72.14.255.255 |
| 74.125.0.0 | 74.125.255.255 |
| 209.85.128.0 | 209.85.255.255 |
| 216.239.32.0 | 216.239.63.255 |
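If you'd rather not maintain a regex, the same ranges can be checked numerically by converting each dotted-quad address to a 32-bit integer and comparing it with each range's endpoints. This is a sketch: the ranges are copied from the table and may have changed since it was compiled, the helper names are hypothetical, and because it spawns a few subshells per check it suits spot-checking a handful of IPs rather than scanning millions of lines.

```shell
#!/bin/sh
# Numeric range check for the Googlebot IP ranges listed in the table.
# Ranges may be out of date; ip_to_int and in_googlebot_range are hypothetical names.
GOOGLEBOT_RANGES="64.233.160.0-64.233.191.255
66.102.0.0-66.102.15.255
66.249.64.0-66.249.95.255
72.14.192.0-72.14.255.255
74.125.0.0-74.125.255.255
209.85.128.0-209.85.255.255
216.239.32.0-216.239.63.255"

ip_to_int() {
  # Split on dots, then shift each octet into place: a.b.c.d -> a*2^24 + b*2^16 + c*2^8 + d
  echo "$1" | {
    IFS=. read -r a b c d
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
  }
}

in_googlebot_range() {
  n=$(ip_to_int "$1")
  # Unquoted expansion splits the list on newlines into "start-end" pairs
  for range in $GOOGLEBOT_RANGES; do
    lo=$(ip_to_int "${range%-*}")   # text before the dash
    hi=$(ip_to_int "${range#*-}")   # text after the dash
    if [ "$n" -ge "$lo" ] && [ "$n" -le "$hi" ]; then
      return 0
    fi
  done
  return 1
}
```

Usage: `in_googlebot_range 66.249.66.1 && echo "inside a listed range"`.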

We can use this information to parse our server logs with a hideous regular expression:

((\b(64)\.233\.(1([6-8][0-9]|9[0-1])))|(\b(66)\.102\.([0-9]|1[0-5]))|(\b(66)\.249\.(6[4-9]|[7-8][0-9]|9[0-5]))|(\b(72)\.14\.(1(9[2-9])|2([0-4][0-9]|5[0-5])))|(\b(74)\.125\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?))|(\b(209)\.85\.(1(2[8-9]|[3-9][0-9])|2([0-4][0-9]|5[0-5])))|(\b(216)\.239\.(3[2-9]|[4-5][0-9]|6[0-3])))\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b
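To apply a pattern like this, pass it to grep in extended mode (`-E`), since it relies on `|`, `?` and grouping parentheses. The sketch below keeps only the lines of the Googlebot-filtered file that contain an address in the listed ranges. It assumes GNU grep, which supports `\b` in `-E` patterns; the embedded pattern limits the 216.239 block to 216.239.32.0 to 216.239.63.255 to match the table and ends with `\b` so a longer number isn't partially matched. It matches an IP anywhere in the line, not only in the client-address field, and `filter_googlebot_ips` is a hypothetical name.

```shell
#!/bin/sh
# Keep lines containing an IPv4 address inside the listed Googlebot ranges.
# Assumes GNU grep; filter_googlebot_ips is a hypothetical helper name.
GOOGLEBOT_IP_RE='((\b(64)\.233\.(1([6-8][0-9]|9[0-1])))|(\b(66)\.102\.([0-9]|1[0-5]))|(\b(66)\.249\.(6[4-9]|[7-8][0-9]|9[0-5]))|(\b(72)\.14\.(1(9[2-9])|2([0-4][0-9]|5[0-5])))|(\b(74)\.125\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?))|(\b(209)\.85\.(1(2[8-9]|[3-9][0-9])|2([0-4][0-9]|5[0-5])))|(\b(216)\.239\.(3[2-9]|[4-5][0-9]|6[0-3])))\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b'

filter_googlebot_ips() {
  # -E enables the extended regex syntax (|, ?, grouping) the pattern uses
  grep -E "$GOOGLEBOT_IP_RE" "$@"
}
```

Usage: `filter_googlebot_ips output.log > verified.log`, where verified.log is just an example output name.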