This is a case I encountered recently and struggled with for a while. To keep things quick – I was working with a fairly baroque faceted navigation that had attracted a substantial number of external links to category URLs containing tracking parameters.

The canonical tag was holding everything together link-equity-wise, but the crawl inefficiency was staggeringly bad. We could have blocked the parameter URLs with robots.txt directives, but that would likely have killed the site’s organic performance – no crawl means the canonical is never seen, and without the canonical those external links pass no value.

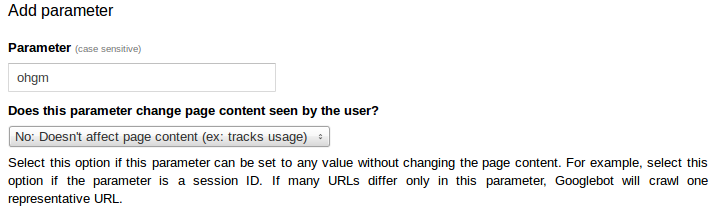

One option of last resort (and I mean last) is Google’s Webmaster Tools parameter configuration. You make Googlebot explicitly aware of what each parameter does (or does not do), so that it can crawl your domain more efficiently. As these were tracking parameters, the recently reworded “does not change content – crawl representative URL” option seemed like a good bet for improving crawl efficiency.
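To make the “crawl representative URL” idea concrete, here is a minimal sketch of how a crawler could collapse tracking-parameter variants into a single representative URL. It assumes the parameters flagged as “does not change content” are `utm_source`, `utm_medium` and `utm_campaign`, and that `colour` is a content-changing facet; these names and the example.com URLs are hypothetical stand-ins, not the parameters from the site in question, and this illustrates the concept rather than Google’s implementation.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters configured as "does not change content".
NON_CONTENT_PARAMS = frozenset({"utm_source", "utm_medium", "utm_campaign"})


def representative_url(url: str, ignored: frozenset = NON_CONTENT_PARAMS) -> str:
    """Return the URL with every ignored (non-content) parameter removed.

    Remaining parameters keep their original order, so URLs that differ
    only in tracking parameters map to the same representative URL.
    Note that urlencode() re-encodes the kept values (e.g. spaces become '+').
    """
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in ignored]
    # An empty query string drops the '?' entirely.
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    variants = [
        "https://example.com/shoes?colour=red&utm_source=newsletter",
        "https://example.com/shoes?utm_campaign=spring&colour=red",
        "https://example.com/shoes?colour=red",
    ]
    for variant in variants:
        # All three print https://example.com/shoes?colour=red
        print(representative_url(variant))
```

The facet parameter survives because it changes content; only the parameters explicitly declared as non-content are dropped.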

But there’s a worry you ought to be having right about now. Many SEOs treat parameter configuration like robots.txt-light: it controls crawl, and when it comes to external links, the linked URL has to be crawled for them to mean anything. There are two opposing cases that I think can be made for how Google interprets this parameter configuration here:

1. Rules as Written

Googlebot will only crawl the representative URL, which in our example is the version without tracking parameters. Since crawl is required to pass link equity via the canonical, the value of all external links to the parameter versions is lost.

2. Google is Quasi-Benevolent

Googlebot treats external links to parameter URLs as if they were directed at the non-parameter versions. When an external link is encountered, the Webmaster Tools directive is checked and a like-for-like substitution is made: the link is treated as if it had always been directed at the short, non-parameter version of the URL.

Webmaster Tools parameter configuration helps Google save bandwidth, so it is in their interest to encourage use of the feature. Failing to pass link equity would discourage it, as site owners could significantly harm their site’s ability to rank simply by helping Googlebot understand duplicate content.
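As a toy model of the two readings above, the sketch below counts how many external links end up credited to the crawled, representative URL under each interpretation. It is not how Google actually computes link equity (nobody outside Google knows that, which is the point of the question); equity is reduced to a plain link count, the tracking parameter names are hypothetical, and example.com stands in for the real site.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical tracking parameters configured as "does not change content".
TRACKING_PARAMS = frozenset({"utm_source", "utm_medium", "utm_campaign"})


def strip_tracking(url: str) -> str:
    """Drop tracking parameters, keeping any other query parameters."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in TRACKING_PARAMS]
    return urlunsplit(parts._replace(query=urlencode(kept)))


def credited_links(link_targets: list[str], benevolent: bool) -> Counter:
    """Count external links credited to each representative URL.

    benevolent=False models "Rules as Written": a link pointing at an
    uncrawled parameter URL is simply lost.
    benevolent=True models "Quasi-Benevolent": the link target is
    substituted with the representative URL before it is counted.
    """
    credited = Counter()
    for target in link_targets:
        representative = strip_tracking(target)
        if target == representative or benevolent:
            credited[representative] += 1
        # Otherwise the parameter URL is never crawled, so nothing is credited.
    return credited


if __name__ == "__main__":
    links = [
        "https://example.com/shoes",
        "https://example.com/shoes?utm_source=blog",
        "https://example.com/shoes?utm_source=forum&utm_medium=referral",
    ]
    print(credited_links(links, benevolent=False))  # Counter({'https://example.com/shoes': 1})
    print(credited_links(links, benevolent=True))   # Counter({'https://example.com/shoes': 3})
```

Under the first reading only the one link that already points at the clean URL counts; under the second, all three do.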


There’s an easy test for this. I know which I’d prefer to be true, but which do you believe?


Hello!

Did you do a test for this?

:)

Tom

I also would like to know if this has been tested.