This will seem completely obvious to some of you. And it kind of is, but my brain hadn’t put it together yet. It’s also a nice opportunity to investigate the aftermath of the indexing issues Google has had in 2019.

Imagine you’ve done a URL migration, executed your side of it competently, and the results are still somewhat lacklustre. Maybe this will help.

Method

Google Search Console’s Internal Links report is very enlightening. It shows you the internal links Google thinks a website has, which in most cases will diverge somewhat from reality.

As always in SEO, what Google believes is more important than what is.

Following a URL migration, there is a good chance that Google’s understanding of parts of the website is based on both URL formats. This can persist even when server access logs show that Googlebot has been crawling the redirects. In these cases, value will be split between the old and new URLs, driving mediocre performance.
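For the server-log check, here is a minimal sketch that counts Googlebot requests to old-structure paths answered with a 301. It assumes your logs are in the Apache/Nginx combined log format, and the `/old-blog/` prefix is a hypothetical stand-in for whatever your pre-migration URLs look like. It matches on the user-agent string only, which can be spoofed, so treat the counts as indicative rather than verified.

```python
import re
from collections import Counter

# Combined log format:
# host ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical: the path prefix shared by your pre-migration URLs.
OLD_PREFIX = "/old-blog/"


def googlebot_redirect_hits(lines, old_prefix=OLD_PREFIX):
    """Count, per old-structure path, Googlebot requests that received a 301."""
    hits = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue  # skip lines that are not in combined log format
        if "Googlebot" not in m.group("agent"):
            continue  # user-agent match only; not a verified Googlebot check
        if m.group("status") == "301" and m.group("path").startswith(old_prefix):
            hits[m.group("path")] += 1
    return hits
```

Old paths with healthy 301 counts that still show up in Search Console are the ones worth poking.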

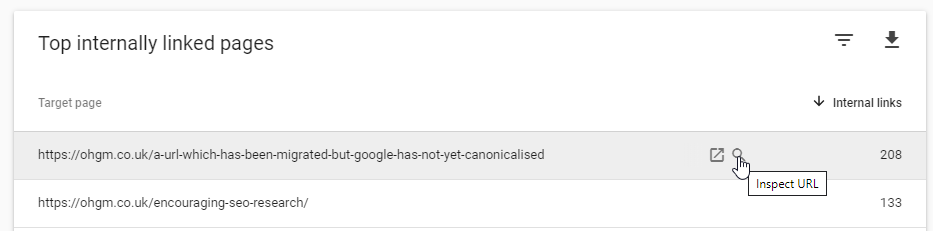

Open the ‘Top internally linked pages’ report and consider the following:

- When a URL is successfully canonicalised, it disappears from this report as links are attributed to the canonical URL.

- As a result, this report is a good place to source URLs which have not yet been successfully canonicalised.

- The UI of the report allows you to inspect these not-yet-canonicalised URLs and submit them for indexing (this usually takes effect within minutes).

Once a non-canonical URL is canonicalised correctly, it drops out of this report at the next refresh. So, to help the new URL structure perform as well as possible:

- Skim through this “Internal Links” list for URLs on the old structure.

- Submit these for indexing.

- This is very easy, if dull. Think about the decisions in your life that have brought you here.
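If you export the report rather than skimming it in the UI, filtering for old-structure URLs is easy to script. This is a minimal sketch assuming a CSV export; the column name `Target page` and the `https://www.example.com/old-blog/` pattern are hypothetical, so check your file’s header row and substitute your own old URL format. It only builds the list: submitting each URL is still done by hand through URL Inspection’s Request Indexing button.

```python
import csv
import io
import re

# Hypothetical: whatever distinguishes your pre-migration URLs.
OLD_URL_PATTERN = re.compile(r"^https://www\.example\.com/old-blog/")
# Assumption: the URL column's name in your export; verify against the header row.
URL_COLUMN = "Target page"


def old_structure_urls(csv_text, url_column=URL_COLUMN, pattern=OLD_URL_PATTERN):
    """Return URLs from an exported links report that still match the old structure."""
    reader = csv.DictReader(io.StringIO(csv_text))
    found = []
    for row in reader:
        url = (row.get(url_column) or "").strip()
        if pattern.match(url):
            found.append(url)  # preserve the report's order (most-linked first)
    return found
```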

The catch with this method is the classic Search Console restriction: only 1,000 rows of data are returned.

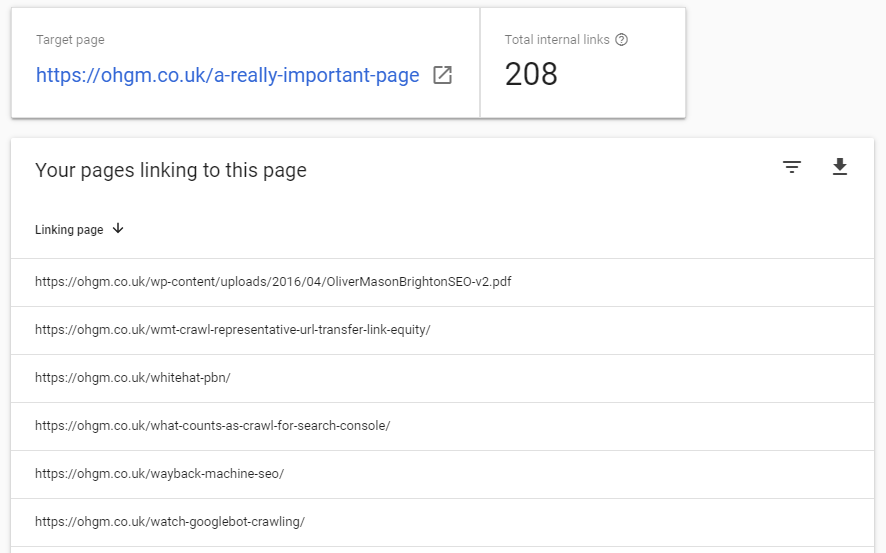

If you want to take this further (you probably do), you can examine the internal links attributed to your top, currently indexed pages.

This surfaces old-structure URLs that Google has not yet canonicalised but that fall outside the 1,000 most-linked-to pages.

- If any of the linking pages are on the old URL structure, submit those old pages too, to further update Google’s picture.

- If any internal link sources are missing (use a crawl to determine what Google isn’t reporting), you can submit the pages that contain those missing links.

I would only do the latter if you are already punching well above your weight and need all the help you can get.
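Both follow-up checks reduce to simple set logic on two lists of linking pages for one target URL: the sources Search Console reports, and the sources your own crawler found. A minimal sketch, assuming you already have both lists as plain URLs; the function name and the `old-blog` pattern are hypothetical. URLs are compared exactly after trimming whitespace, so normalise trailing slashes or parameters beforehand if your crawler and Search Console differ on them.

```python
import re

# Hypothetical pattern for pre-migration URLs; replace with your own.
OLD_URL_PATTERN = re.compile(r"^https://www\.example\.com/old-blog/")


def triage_link_sources(gsc_sources, crawl_sources, pattern=OLD_URL_PATTERN):
    """Split the linking pages for one target URL into two follow-up lists.

    gsc_sources:   linking pages Search Console reports for the target
    crawl_sources: linking pages your own crawl found for the same target
    """
    gsc = {u.strip() for u in gsc_sources if u.strip()}
    crawl = {u.strip() for u in crawl_sources if u.strip()}
    # Sources Google still attributes to old-structure pages: submit these.
    old_structure = sorted(u for u in gsc if pattern.match(u))
    # Sources the crawl found that Google isn't reporting: optional extra submissions.
    missing = sorted(crawl - gsc)
    return old_structure, missing
```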

That’s all there is to it – poke the stubborn URLs Google Search Console surfaces in the Internal Links report by smashing that REQUEST INDEXING button.

(I realise it’s a bit counter-intuitive to request indexing for a URL that is currently indexed and that you want removed from the index, but this is the world we live in.)

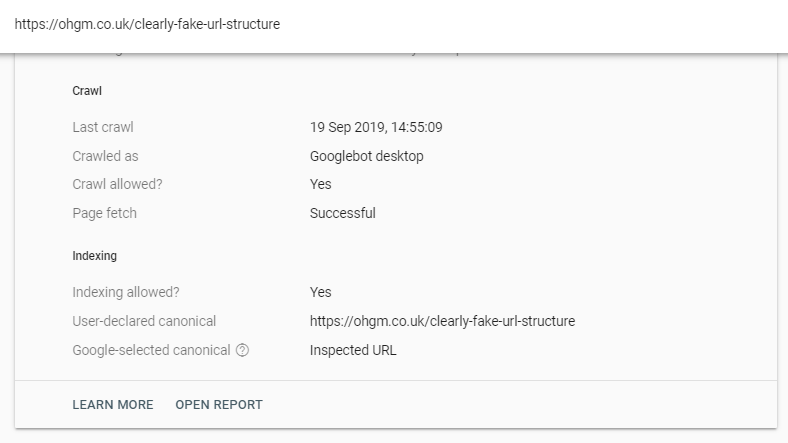

Indexing Bug Resurrections

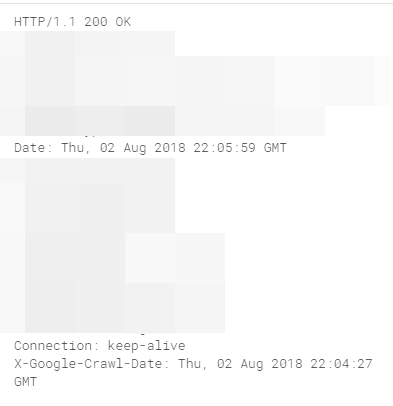

If you start inspecting some of these URLs that haven’t migrated and look a little too closely (View Crawled Page > HTTP Response, under MORE INFO), you may see some rather bizarre behaviour.

I had previously come across this by chance while researching the X Google Crawl Date post; Barry Hunter noticed the behaviour. It doesn’t only affect migrated URLs, but it is much easier to spot with them.

If you are actively trying to improve your pages, then Google ranking your page based on a version over a year old is a bad thing.

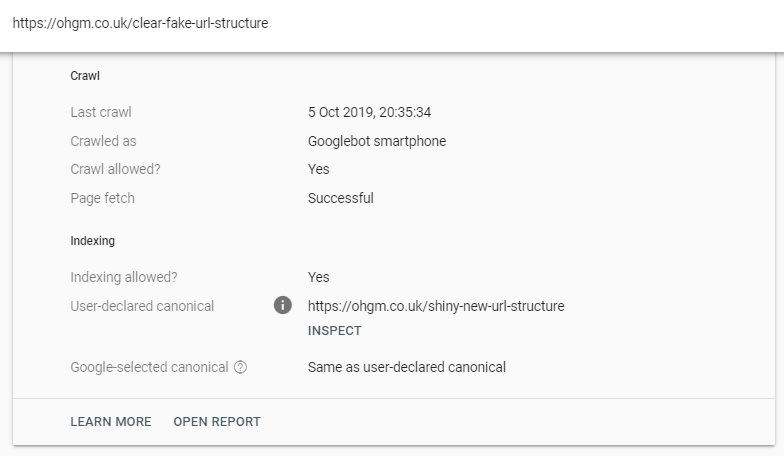

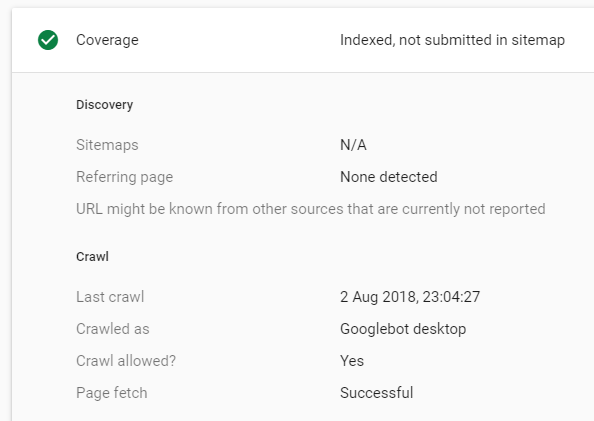

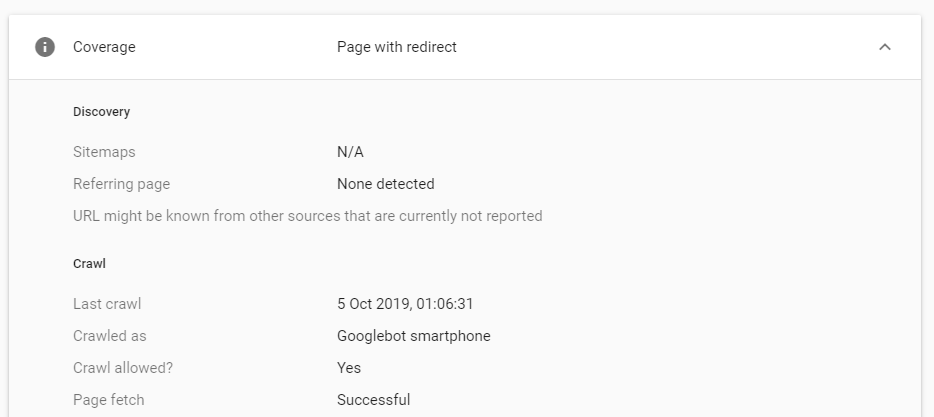

As you might expect, submitting this page for indexing drops it out of the index immediately (which is what we want) and gets the redirect counted.

Weirdly, the “last crawl” date doesn’t show the submission time, as it usually does, but the last genuine crawl date. This is probably a quirk of the UI reporting the last indexing of the redirect destination rather than of the URL actually submitted.

We know that this URL has been crawled and reindexed several times since August 2018, so why is this ancient HTML showing up now?

My best guess is that this was caused by the indexing issues Google has had this year. To explain it badly: Google lost a not-insignificant percentage of its picture of “the internet”. Luckily, Google keeps periodic backups.

This gave them the option to either:

a) use these ancient backups to keep the link graph (mostly) going, or

b) accept that this part of the link graph has been lost and by the way your page has no chance of ranking any more for a little while, sorry.

They’ve opted for the former. We just have to keep smashing that REQUEST INDEXING button.
