This post is about tunneling the requests Screaming Frog makes over SSH, not about controlling a remote instance of the program. Why? In my case the machine at the other end of the tunnel (e.g. a Raspberry Pi at home, for when I am traveling) is whitelisted to access a staging server. You can use the following to avoid setting up a proxy on a VPS (though you should, as it’s much easier than this method).

Setting up an SSH Tunnel

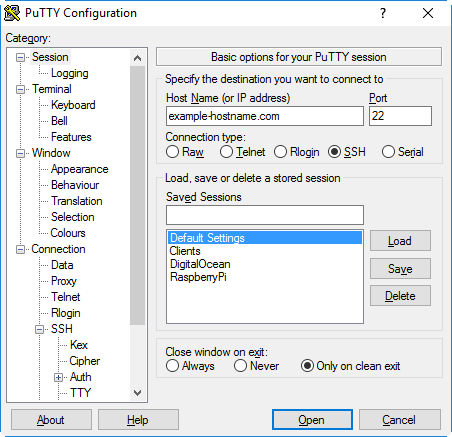

First, download PuTTY. Then configure it as follows:

- Enter the IP address/hostname and the correct port number for SSH connections.

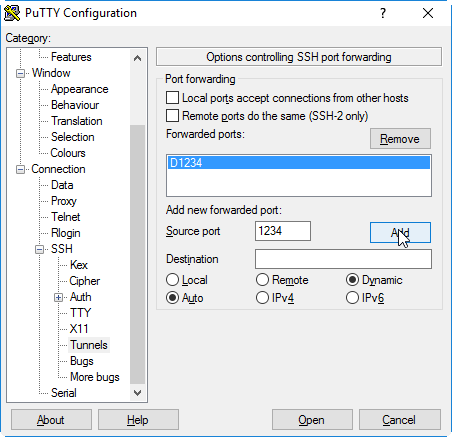

- Under Connection –> SSH –> Tunnels, define a new source port (pick a number, e.g. 1234) and select Dynamic, so that PuTTY acts as a SOCKS proxy on that port.

- Click Add, then click Open.

- Enter your credentials. That’s it. Outgoing requests over the port you’ve specified will be made from the remote server as long as the connection remains open.
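For reference, the PuTTY settings above amount to a dynamic (SOCKS) port forward. Here is a minimal Python sketch of the same idea using the OpenSSH command-line client instead of PuTTY. That swap is an assumption, not the setup shown in the screenshots, and the user name, host name and function names are placeholders. It builds the `ssh -N -D` command and checks whether the local port is accepting connections.

```python
import socket
import subprocess


def build_tunnel_command(user: str, host: str, local_port: int, ssh_port: int = 22) -> list[str]:
    """Return an OpenSSH command that opens a SOCKS proxy on local_port.

    -N: do not run a remote command, only forward ports.
    -D: dynamic (SOCKS) forwarding on the given local port.
    -p: SSH port on the remote server.
    """
    return ["ssh", "-N", "-D", str(local_port), "-p", str(ssh_port), f"{user}@{host}"]


def tunnel_is_listening(local_port: int, host: str = "127.0.0.1", timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:local_port."""
    try:
        with socket.create_connection((host, local_port), timeout=timeout):
            return True
    except OSError:
        return False


def open_tunnel(user: str, host: str, local_port: int) -> subprocess.Popen:
    """Start the tunnel in the background; call terminate() on the result to close it."""
    return subprocess.Popen(build_tunnel_command(user, host, local_port))
```

Calling `tunnel_is_listening(1234)` once the connection is open is a quick way to confirm the port is live before pointing a browser or proxifier at it.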

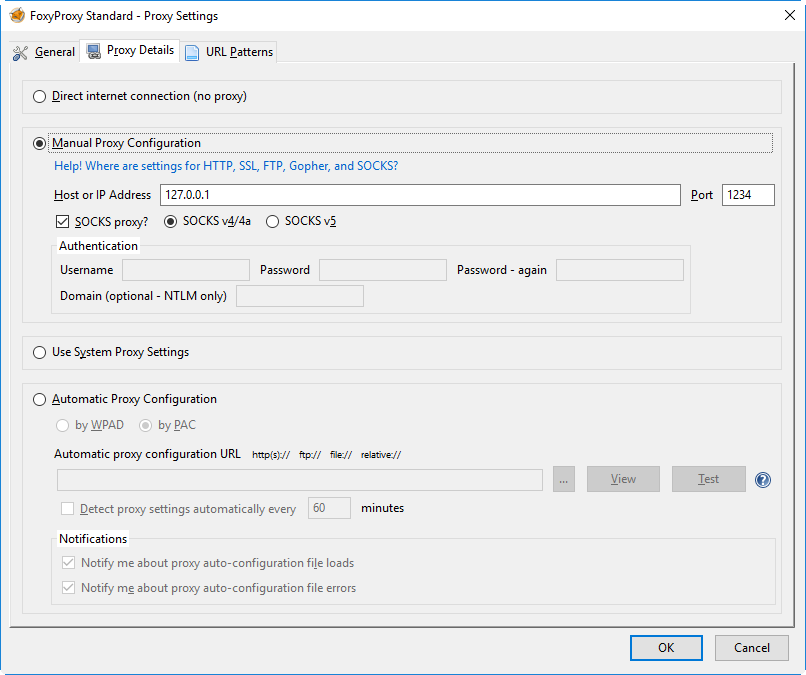

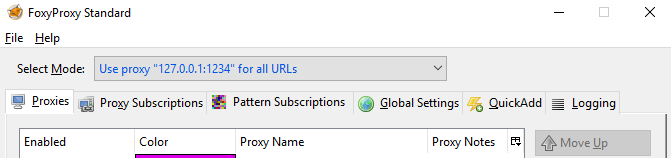

To check it’s working (and to give an example of tunneling), you can use something like FoxyProxy (Firefox or Chrome) to push your browser requests through the port you specified:

To test it, click this link, enable the proxy, then click the link again:

If the IP addresses are different, congratulations. If not, good luck.
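The same check can be scripted. The sketch below uses only the Python standard library: it speaks the SOCKS5 handshake (RFC 1928) to the local tunnel port and fetches a plain-text "what is my IP" page through it. The endpoint `api.ipify.org` and all function names are assumptions for illustration; any service that returns your IP as plain text over HTTP should do.

```python
import socket
import struct
import urllib.request

IP_ECHO_HOST = "api.ipify.org"  # assumption: any plain-text IP echo service works


def build_connect_request(host: str, port: int) -> bytes:
    """SOCKS5 CONNECT request addressed by domain name (ATYP 0x03)."""
    name = host.encode("idna")
    if len(name) > 255:
        raise ValueError("hostname too long for SOCKS5")
    return b"\x05\x01\x00\x03" + bytes([len(name)]) + name + struct.pack(">H", port)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the peer closes early."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed early")
        buf += chunk
    return buf


def http_get_via_socks5(proxy_port: int, host: str, path: str = "/",
                        proxy_host: str = "127.0.0.1", timeout: float = 10.0) -> str:
    """Fetch http://host/path through a no-auth SOCKS5 proxy; return the body."""
    with socket.create_connection((proxy_host, proxy_port), timeout=timeout) as s:
        s.sendall(b"\x05\x01\x00")  # version 5, one method offered: no authentication
        if recv_exact(s, 2) != b"\x05\x00":
            raise ConnectionError("proxy did not accept no-auth SOCKS5")
        s.sendall(build_connect_request(host, 80))
        _ver, rep, _rsv, atyp = recv_exact(s, 4)
        if rep != 0:
            raise ConnectionError(f"SOCKS5 connect failed, reply code {rep}")
        # Skip the bound address and port that follow the reply header.
        if atyp == 1:
            recv_exact(s, 4)
        elif atyp == 4:
            recv_exact(s, 16)
        elif atyp == 3:
            recv_exact(s, recv_exact(s, 1)[0])
        recv_exact(s, 2)
        s.sendall(f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii"))
        data = b""
        while chunk := s.recv(4096):
            data += chunk
    return data.split(b"\r\n\r\n", 1)[-1].decode("utf-8", "replace").strip()


def public_ip_direct(host: str = IP_ECHO_HOST) -> str:
    """Fetch the same page without the tunnel, for comparison."""
    with urllib.request.urlopen(f"http://{host}/", timeout=10) as resp:
        return resp.read().decode("utf-8", "replace").strip()
```

With the tunnel open on port 1234, `public_ip_direct()` and `http_get_via_socks5(1234, IP_ECHO_HOST)` should return different addresses.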

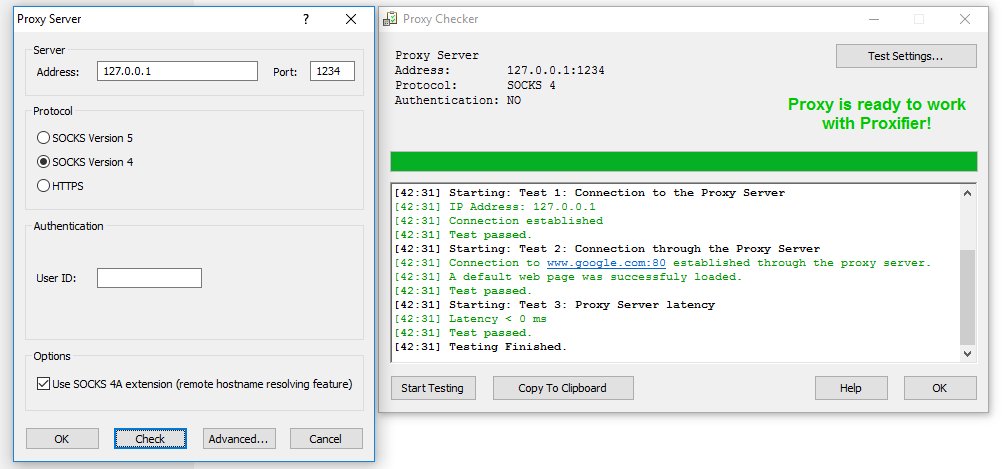

Run Windows Applications Over SSH

A proxifier does what you’ve just done in the browser, but for applications. For this example I’m going to show both ProxyCap and Proxifier. You set the proxifier to use the SSH tunnel you’ve set up and shunt traffic from specific applications through it (so requests are made by the remote server and the responses come back to your computer).

With the SSH connection in PuTTY still open, we set up the proxifier using the same settings as FoxyProxy:

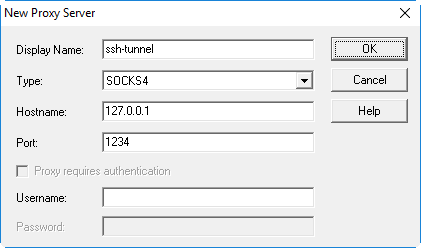

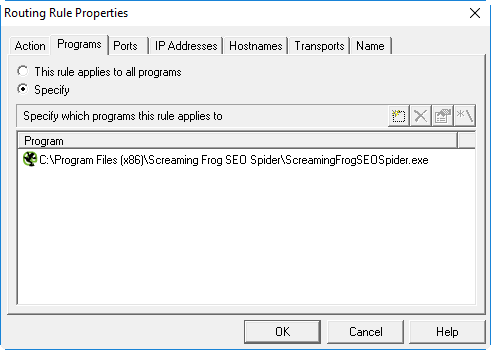

Today, I wanted to tunnel traffic for Screaming Frog SEO Spider, so I added a rule for the program to use the proxy I’d set up:

Screaming Frog of course works fine with these settings. But it still throws a Connection Refused when I try to crawl the staging server, because for some reason it’s crawling from my local machine and not the whitelisted remote server.

Screaming Frog over SSH

This stumped me for a little while, but the solution turned out to be fairly obvious. If this page ranks then hopefully I can save you some minutes of frustration.

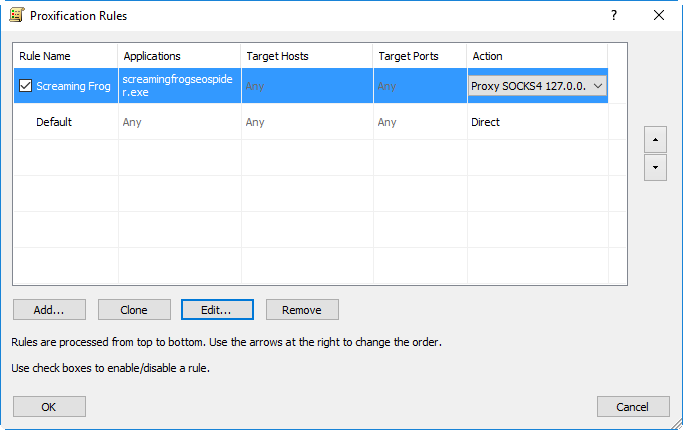

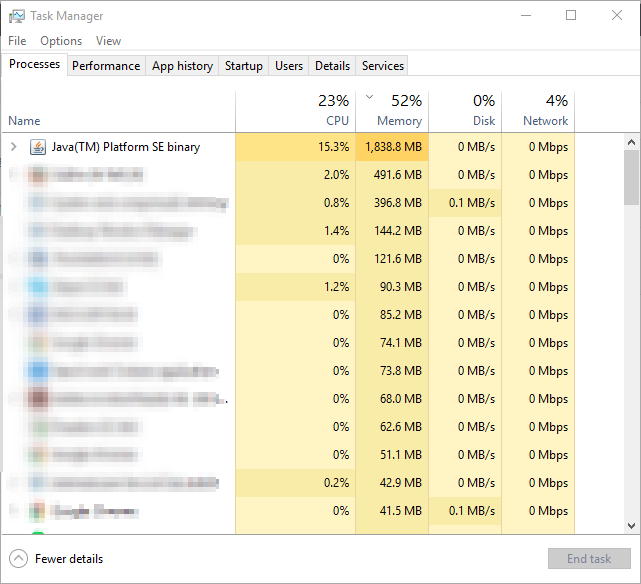

If you run crawls with any regularity you’ll be used to seeing this:

And after seeing this, the solution becomes obvious. It’s not the Screaming Frog application which is sending all the network traffic, but Java (which Screaming Frog runs on).

To make it work, right-click the running Java(TM) Platform SE binary in Task Manager and open the file location. This gives you the program and location you need to apply your proxifier rule to, alongside Screaming Frog. Once this is done you shouldn’t have any issues crawling.
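If you would rather find the Java binary from a script than from Task Manager, something like the following sketch could work. It assumes the third-party `psutil` package is installed, and the function names are made up for illustration; the filtering step is kept separate so it can be tried on plain data.

```python
from typing import Iterable, Iterator, Optional


def find_java_executables(processes: Iterable[tuple[str, Optional[str]]]) -> list[str]:
    """Return distinct executable paths of processes whose name starts with 'java'.

    Each item is a (process name, executable path or None) pair.
    """
    paths: list[str] = []
    for name, exe in processes:
        if exe and name.lower().startswith("java") and exe not in paths:
            paths.append(exe)
    return paths


def running_processes() -> Iterator[tuple[str, Optional[str]]]:
    """Yield (name, exe) for every running process. Requires psutil (third-party)."""
    import psutil  # imported lazily so the filter above works without it

    for proc in psutil.process_iter(attrs=["name", "exe"]):
        # exe is None when the process cannot be inspected (e.g. access denied)
        yield proc.info["name"] or "", proc.info["exe"]
```

With a crawl running, `find_java_executables(running_processes())` should list the path (or paths) to add to the proxifier rule.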

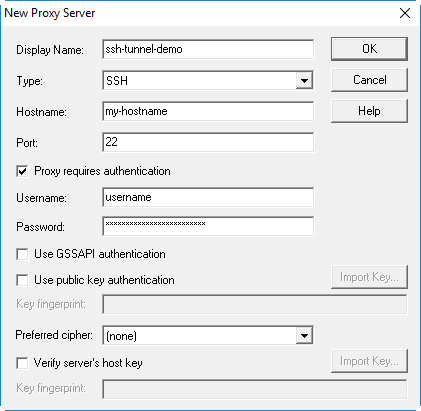

I’ve not had this run well with anything except ProxyCap or Proxifier so far. Both are paid, but have free trials and support Windows and OS X. I prefer ProxyCap here because you can give it SSH credentials directly, bypassing the need for PuTTY entirely:

I tried making this work with SocksCap, FreeCap and WideCap, but these seem to launch a program rather than latch onto a running one. You don’t want a proxifier that launches a program on your behalf, since launching Java alone won’t actually do anything. Screaming Frog has to be in control. You want a proxifier which will step in front of any running instance of a program. There’s a difference (the difference is that one works and one does not).

Happy Crawling.